1. Contact

Contact

To learn more about Intella Connect™, please contact us using the contact information below, or contact an Intella Channel Partner.

Vound

Office Phone

+1 888-291-7201

Email

sales@vound-software.com

Postal Address

10643 N Frank Lloyd Wright Blvd, Suite 101 Scottsdale, AZ 85259 U.S.A.

Sales Contacts

https://www.vound-software.com/about-us#partners

We will be pleased to provide additional information concerning Intella Connect and schedule a demonstration at your convenience.

To become an Intella Connect reseller, please contact us!

For user and technical support please visit our website: http://www.vound-software.com.

Vound Colorado (“Vound”).

© 2023 Vound. All rights reserved.

The information in this User Manual is subject to change without notice. Every effort has been made to ensure that the information in this manual is accurate. Vound is not responsible for printing or clerical errors.

VOUND PROVIDES THIS DOCUMENT “AS IS” WITHOUT WARRANTY OF ANY KIND, EITHER EXPRESS OR IMPLIED AND SHALL NOT BE LIABLE FOR TECHNICAL OR EDITORIAL ERRORS OR OMISSIONS CONTAINED HEREIN; NOR FOR INCIDENTAL OR CONSEQUENTIAL DAMAGES RESULTING FROM THE FURNISHING, PERFORMANCE, OR USE OF THIS MATERIAL.

Other company and product names mentioned herein are trademarks of their respective companies. It is the responsibility of the user to comply with all applicable copyright laws. Mention of third-party products is for informational purposes only and constitutes neither an endorsement nor a recommendation. Vound assumes no responsibility with regard to the performance or use of these products. Under the copyright laws, this manual may not be copied, in whole or in part, without the written consent of Vound. Your rights to the software are governed by the accompanying software license agreement. The Vound logo is a trademark of Vound. Use of the Vound logo for commercial purposes without the prior written consent of Vound may constitute trademark infringement and unfair competition in violation of federal and state laws.

All rights reserved by Vound. Intella and Intella Connect are trademarks of Vound.

2. An introduction to Intella Connect

Intella Connect is a web-based investigation and eDiscovery tool. It is ideally suited for use by enterprise, law enforcement and regulatory agencies in civil, criminal or policy-related investigations. It allows you to share any case that has been made with Intella 100, Intella 250, Intella Professional (Pro) or previous version of Intella TEAM Manager. The case can then be reviewed using any of the supported web browsers.

Cases can also be created directly in Intella Connect and its sources can be indexed using Intella Node. If a case already exists, it is however not required to have Intella Node in order to share such case.

Intella Connect’s unique visual presentation will let you quickly and easily search and review email and electronically stored information to find critical evidence and visualize relevant relationships. The birds-eye view helps you gain insight in information that is available on combinations of keywords. In each step of your search it shows the number of emails or files that match your search (and of course a link to the e-mails and files themselves) so that you can effectively zoom in to find what you are looking for.

With Intella Connect, you can…

-

Gain deeper insight through visualizations.

-

Search email, attachments, archives, headers, and metadata.

-

Drill deeply into the data using Intella Connect’s unique facets.

-

Group and trace email conversations.

-

Preview, cull, and deduplicate email and data.

-

Export results.

2.1. Supported web browsers

-

Google Chrome (most recent version)

-

Mozilla Firefox (most recent version)

-

Microsoft Edge

|

As Microsoft has officially announced ending support for Internet Explorer, we are no longer supporting it as well. |

|

Warning

Google Chrome and MS Edge will not delete session cookies after they are closed. That means that logged in user will not be logged out. With this in mind it’s always best to log out manually when you finish using Intella Connect. |

2.2. Feedback

We take great care in providing our customers with a pleasant experience, and therefore greatly value your feedback. You can contact us through the form on http://support.vound-software.com/ or by mailing to one of the email addresses on the Contact page.

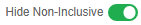

3. Working with Intella Connect

3.1. Getting started

In order to start the review of a case, the first step is to visit the User Dashboard. The link and user credentials to access the dashboard should be provided to you by the Intella Connect administrator.

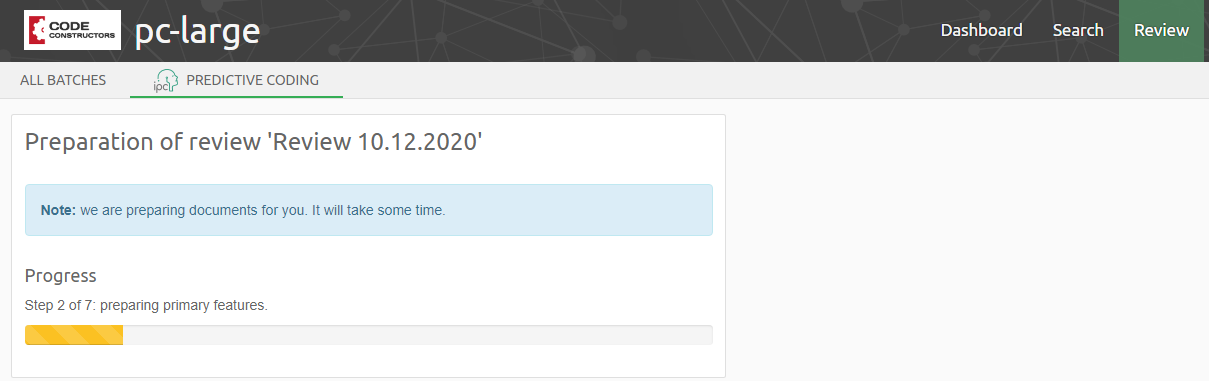

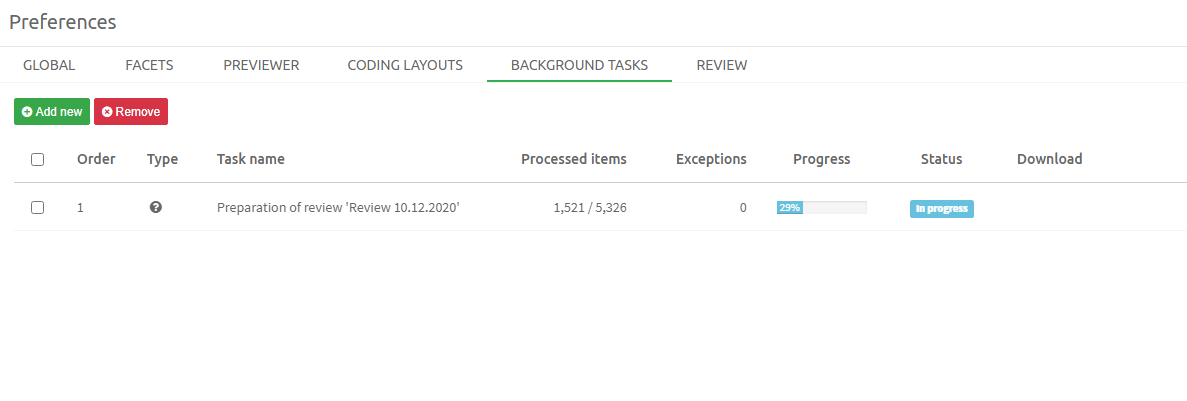

After logging in, the following screen will be shown:

For a better user experience it is suggested to change the default profile image with a custom image.

This image will be displayed in the Activity Streams, Comments and User Management pages.

The default profile image can be changed by selecting the Change avatar picture option available in the menu located in the upper right corner.

Currently supported image formats are PNG and JPG.

The recommended minimum size of a profile image is 128 by 128 pixels.

If the ratio of the uploaded image is not 1:1, it is cropped as showed on the figure below:

![]()

Under the profile image, there is a list of cases which are currently assigned to you and available for review. Click on a desired case to access the Intella Connect case reviewing interface.

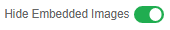

- The options icon

allows user to change

allows user to change -

-

the avatar picture

-

the password

-

two-factor authentication settings

-

or log out from Intella Connect:

![]()

Change avatar picture

Changing the avatar Image is straightforward - select Change avatar picture from the menu.

After dialog is shown press Browse button and select the picture (png/jpg) you want to use as your avatar.

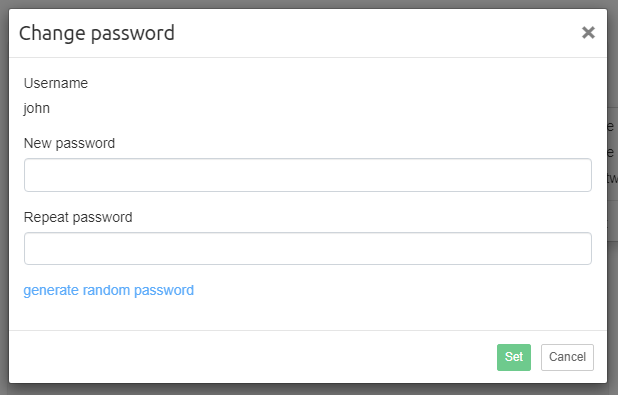

Change password

To change password, select Change password from the menu.

Once the password fields are filled in, click on the Set button to change the user’s password.

After changing the password, you will be asked to log in again with a new password.

|

Passwords can be generated by clicking on the |

Setup two-factor authentication

The feature of two-factor authentication (2FA) for local Intella Connect users has been added in version 2.4.2.

With 2FA, an extra security layer is added to the user account. After setting it up correctly, when logging in to Intella Connect , then the user will be asked to login in two steps:

-

first with username and password

-

second with time-based one-time password (TOTP) generated on phone or another device

Due to the second step being time-based, it is required for the server on which Intella Connect is running as well as a device on which authenticator is running to have system clocks synchronized.

Please make sure to enable "Set time automatically" option on Windows so that the system clock is synchronized with the internet time server.

The same applies to phones and other devices on which authenticator is running.

To set up two-factor authentication, select Setup two-factor authentication from the menu.

This will open a modal window which shows the current status of 2FA and allows to enable or disable it.

By default, the 2FA is disabled as is shown when opening the modal window for the first time:

To start the process of enabling the 2FA, click on Enable 2FA button. This will activate TOTP generation on Intella

Connect

side for the user.

At this point, 2FA is still disabled and further steps are required:

To finish 2FA setup, do the following:

-

If authenticator is not installed on device, then install authenticator application on device using built-in application store (Google Play for Android devices and App Store for Apple devices). Google Authenticator or Microsoft Authenticator are recommended. In the authenticator application on phone, add a new entry in one of two ways:

-

Choose to scan the QR code with your device’s camera to add the entry automatically.

-

Enter the details provided to add the entry manually.

-

-

Enter the six digit number from your device to the field in modal window and click

Verify credentialsbutton.

If the six digit number from your device will match the six digit number generated in Intella Connect , then 2FA will be enabled for this user.

The modal window can now be closed. When logging in, you will be prompted to enter username and password as well as a six digit number generated by your authenticator.

It is recommended to use 2FA to strengthen security of all local user accounts.

To disable 2FA, enter current password and the six digit number from your device and then click on Disable 2FA button.

If there are unexpected issues with 2FA and it cannot be disabled by the user, then it is suggested to contact Intella Connect administrator, who can follow troubleshooting steps in administrator’s section of this user manual.

3.2. Overview of the case reviewing interface

The first page that will be shown to user, which has successfully logged in and has been granted access to this case is the Dashboard view.

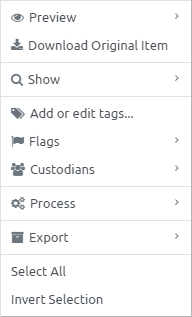

4. Views

The case review interface consists of five primary views that the user can choose between:

-

Dashboard view (default) - allows user to get an overview of the case.

-

Search view - used for searching, exploring, investigating data and reviewing results.

-

Review view - allows for a simplified, cost-effective review of grouped items.

-

Exports view - for managing exported results.

-

Report view - used for generating reports, such as detailed statistics about the keywords in a keyword list or user activity on a case.

The primary view can be selected in the top bar:

![]()

|

Views are shown based on whether the current user has sufficient permissions, so it might happen that not all of them will be shown. |

The top bar also shows the name of the currently logged user along with his avatar. The available dropdown menu allows going back to the user dashboard, or signing out of Intella Connect.

The lower bar represents Secondary Navigation and is split into two parts. Tabs on the left allow getting into a more detailed sub-view of the main view.

Action icons on the right allow for access to:

Dashboard view

The Dashboard view is the default view that Intella Connect will display when the case is opened. It allows the user to see an overview of the case, particularly the kinds of data which are contained in it, overall progress of the investigation as well as individual activities of other investigators.

Dashboard view interface consists of the following widgets:

-

Data overview - shows data sources that have been indexed, types of items and top 10 email addresses in this case.

-

Tags - shows all tags that have been applied to items or, when a particular tag is selected, the items tagged per user.

-

Progress - shows how many items have been previewed, tagged, redacted, flagged or exported.

-

Reviewers - shows reviewers that are participating in investigation of this case. Reviewers that are currently logged in are shown as active.

-

My Work - shows the actions performed by current user.

-

Activities - contains records related to user activities like viewed items, tagging, flagging, exporting, etc.

-

Alert box - shows notifications regarding the case and the data contained in it, such as exceptions or the existence of encrypted items.

|

Widgets can be reordered and resized to accommodate user’s needs. |

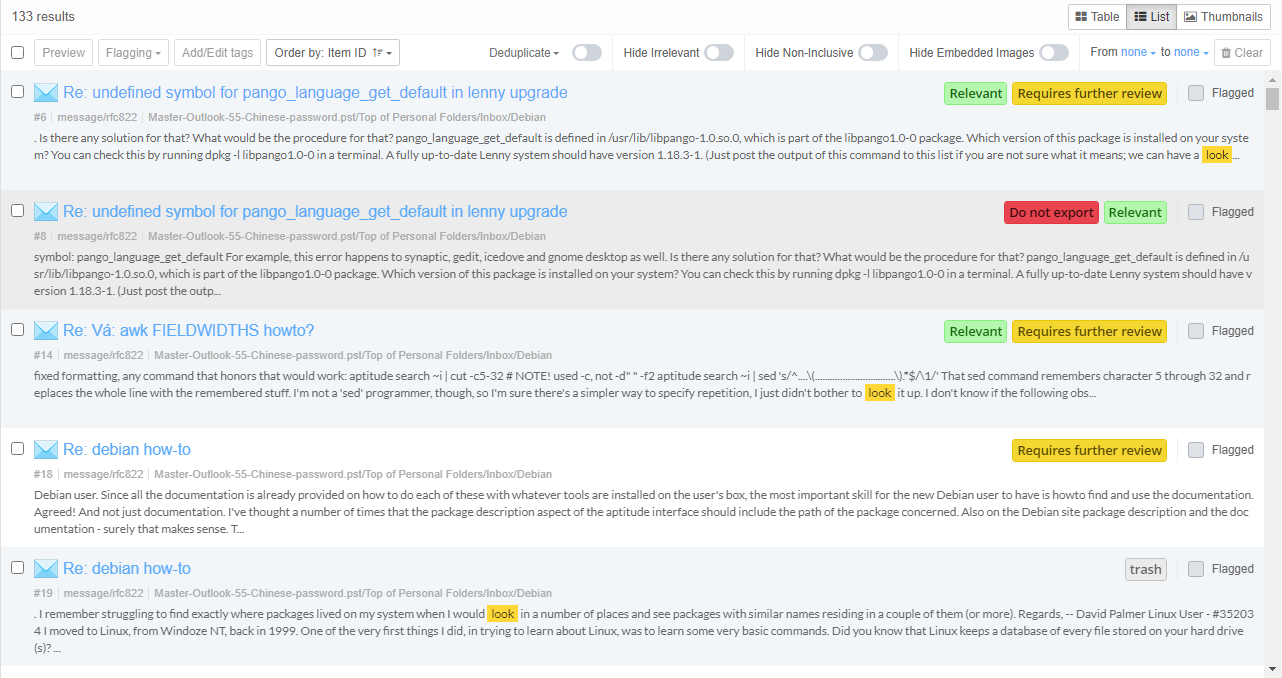

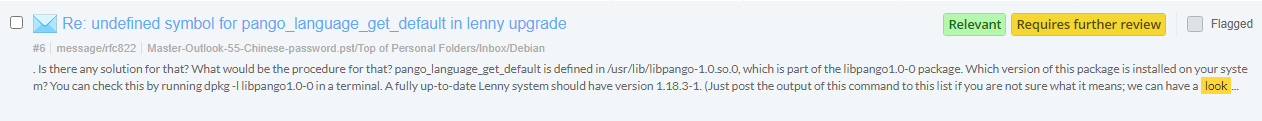

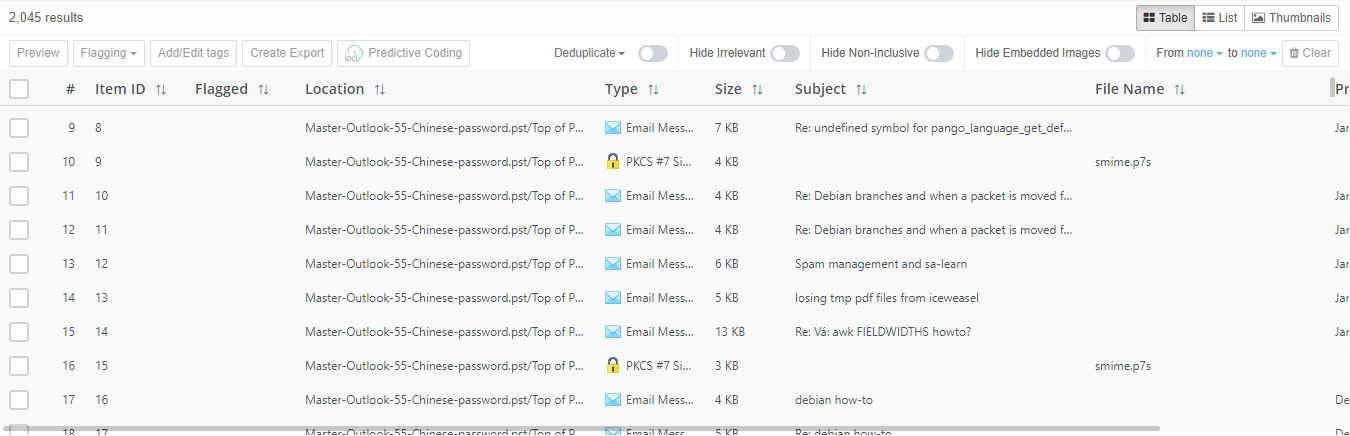

Search view

The Search view is usually the place where most of the work will commence. It allows users to search and explore items held in a case, find the ones matching user’s investigation needs and further review them. It offers a few different sub-views, each tailored to accommodate different kinds of investigation needs.

Insight sub-view

The Insight view shows notable aspects of the indexed evidence files and possible next steps to take. The overview given here can help an investigator get a grasp of the case’s contents, such as the encountered item types and their volumes, date ranges, web browser activity, etc. This will help formulate follow-up questions for further research. Most elements in this view can be clicked or double-clicked, which adds a search in the Search sub-view or opens the corresponding item in the Previewer.

|

Widgets can be reordered and resized to accommodate user’s needs. |

To learn more about this view please refer to the Insight view section.

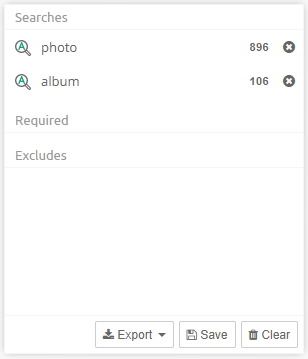

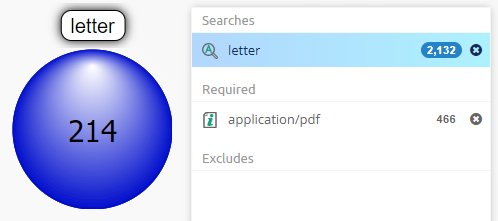

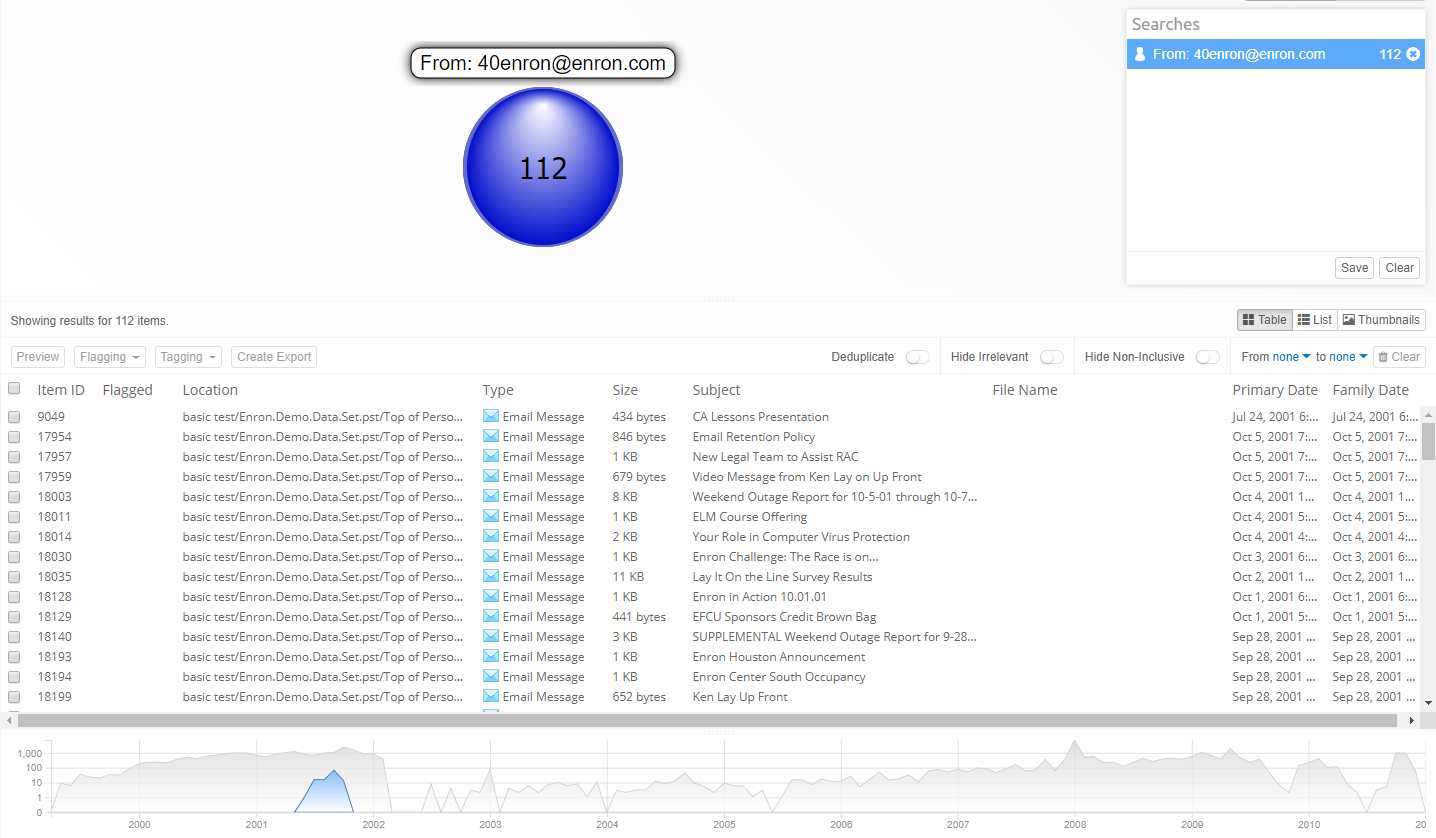

Search sub-view

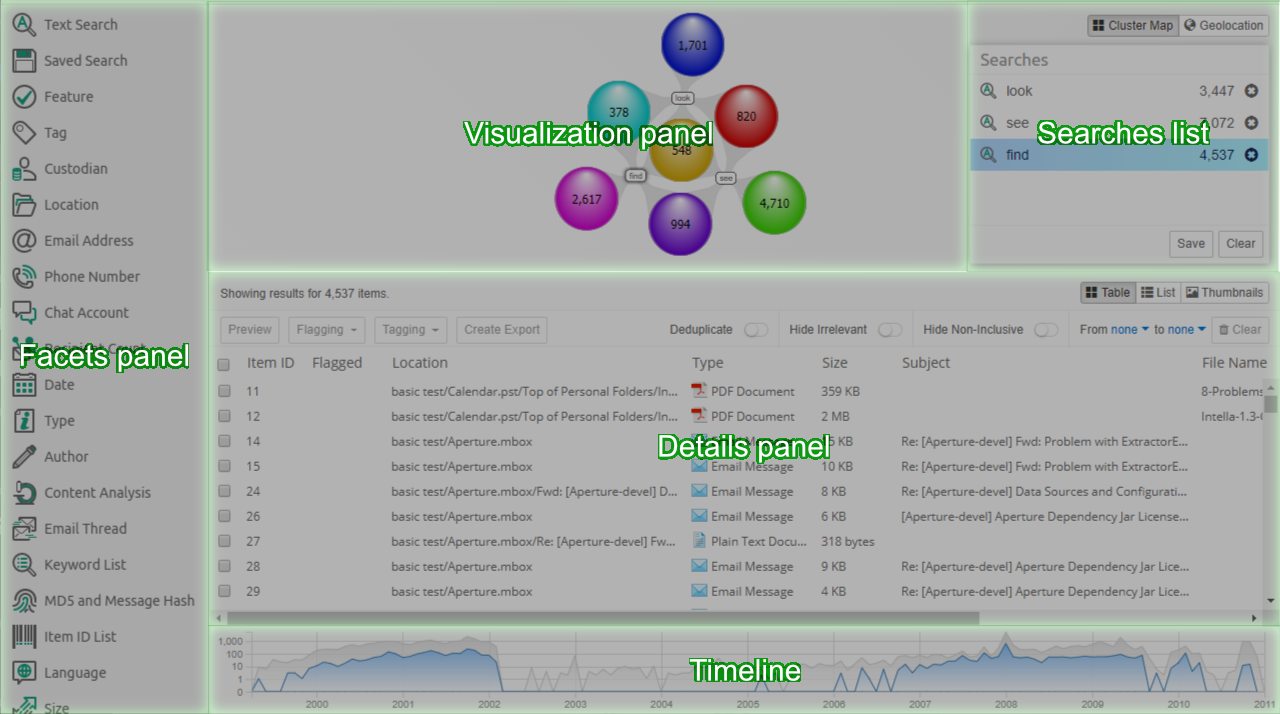

Search view interface consists of the following panels:

-

Facets panel - shows a list of facets for searching and filtering results.

-

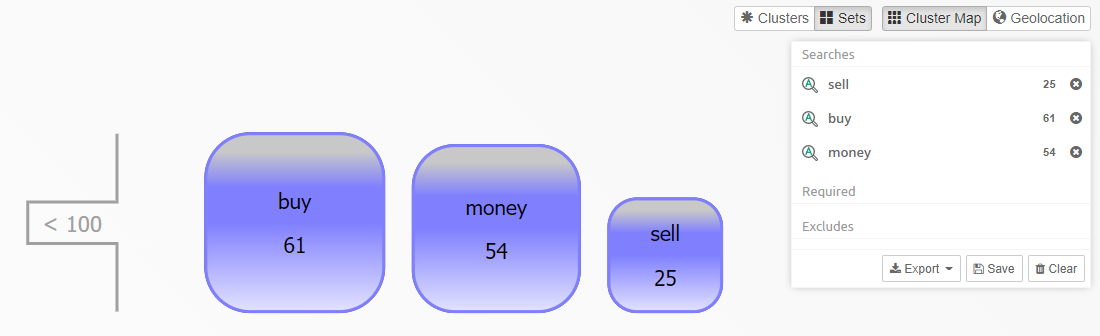

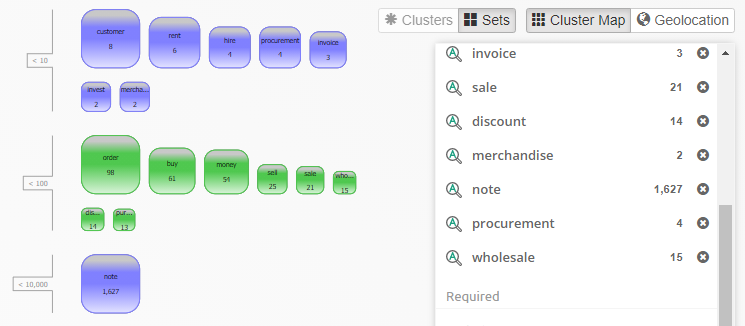

Visualization panel - shows how search results are connected to parts of the query.

-

Searches list - shows user’s queries.

-

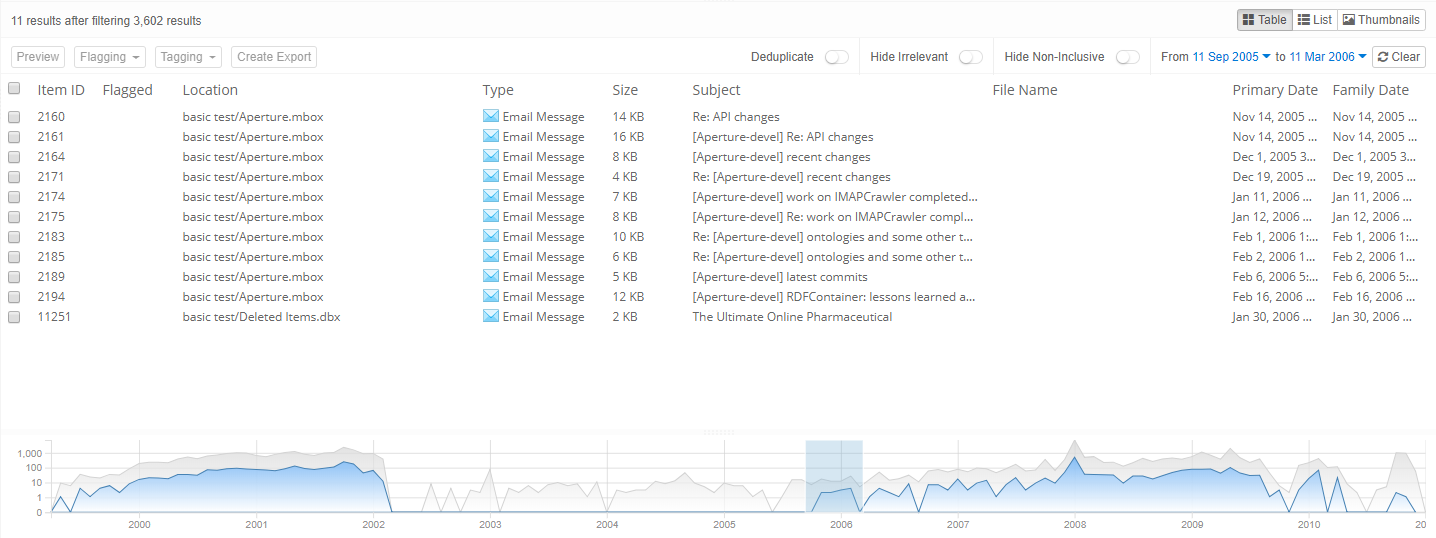

Details panel - shows a list, table or thumbnail view of the results in a selected cluster.

-

Timeline - Optionally, timeline depicting distribution of items over time is shown at the bottom, see Timeline section.

When Intella Connect opens the case for the first time, the Cluster Map, Selections and Details panels will all be empty. The investigative work can be started by using one of the available Facets.

|

Panel can be resized by dragging the edge between panels. This way users can adjust the sizes of panels the way that best suits their needs. |

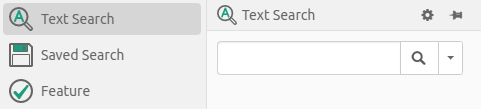

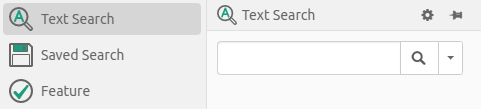

The first facet is a text search facet which consists of:

-

Search text field - to search for text, enter a query in this text field and click the Search button.

-

Search button - to evaluate the currently entered query.

-

Search drop-down button - the text that is being searched for can also be required and excluded. This allows for filtering items on the text without these queries appearing as individual result sets in the Cluster Map visualization. Clicking on gear icon will show additional options which allow limiting keyword searching to specific item parts or attributes.

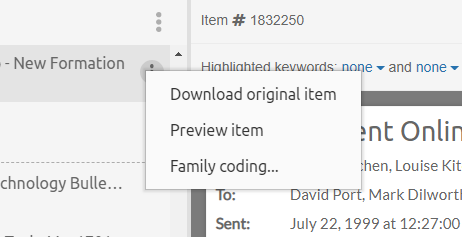

The right part of the Images view is basically a thumbnails view along with action options. Each item can be right-clicked to show a list of options.

Review view

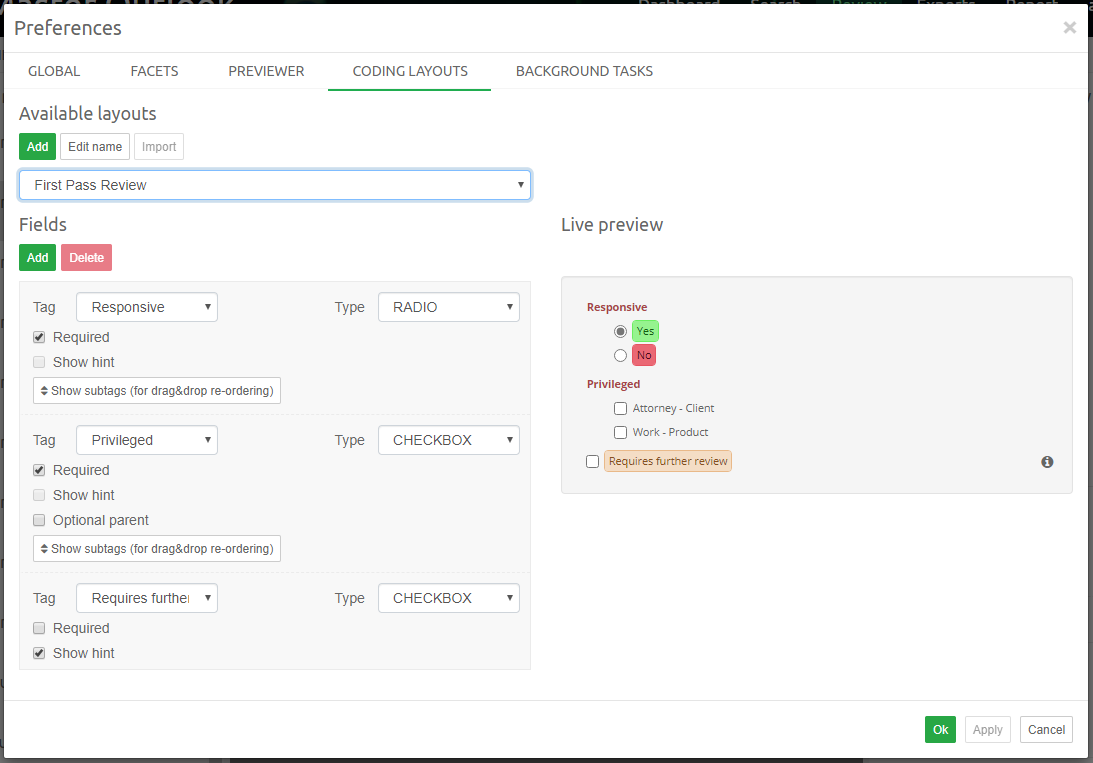

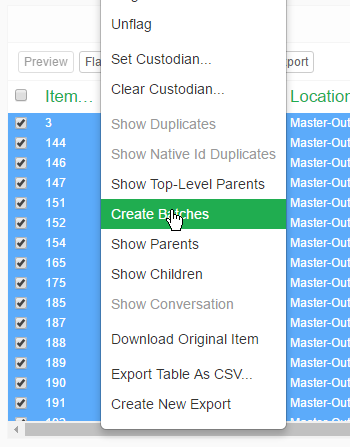

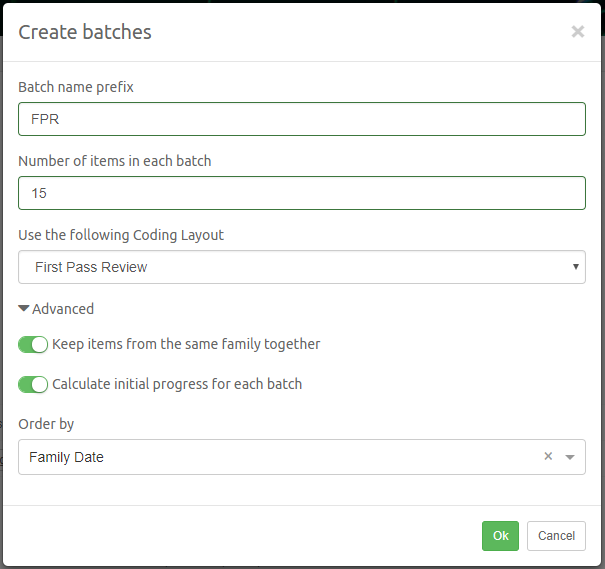

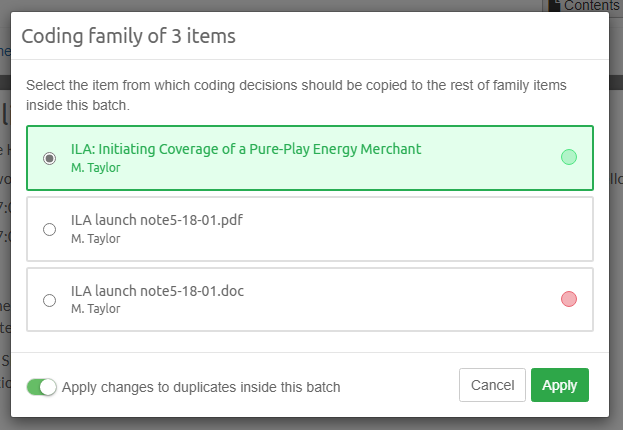

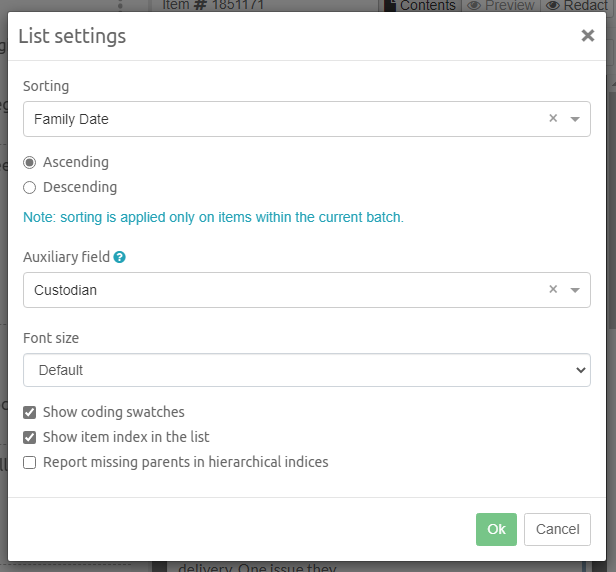

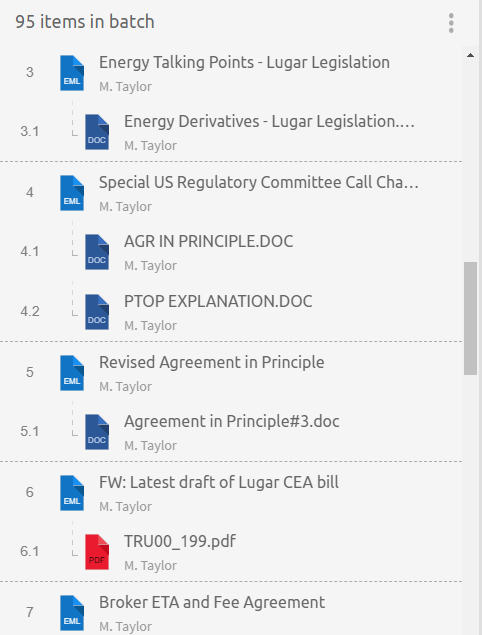

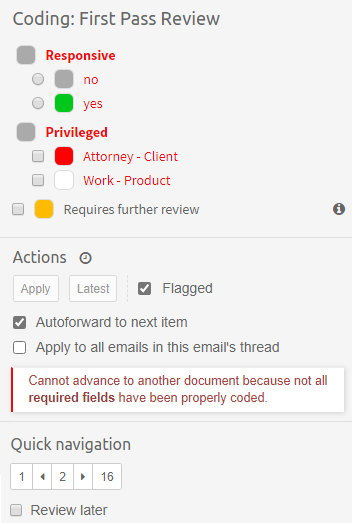

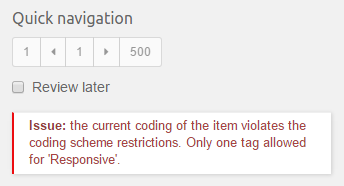

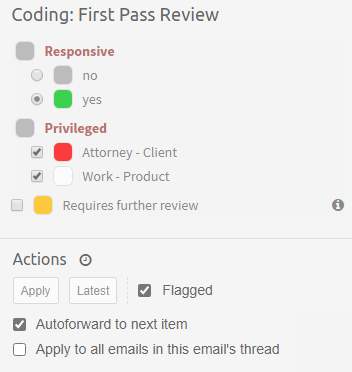

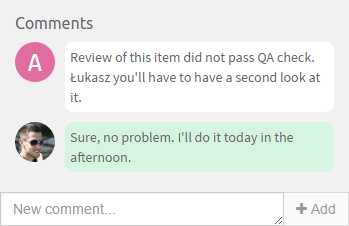

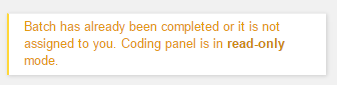

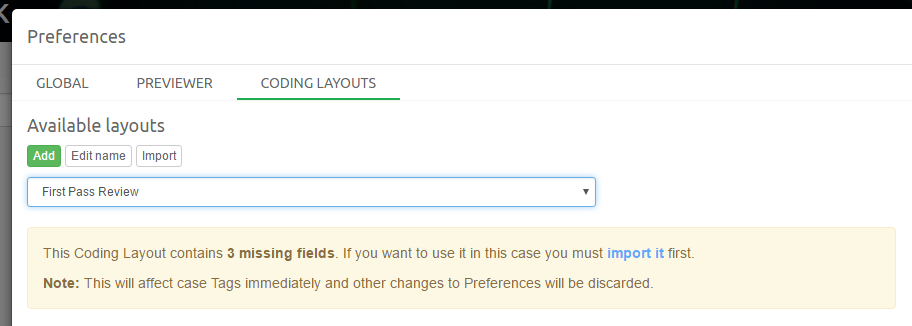

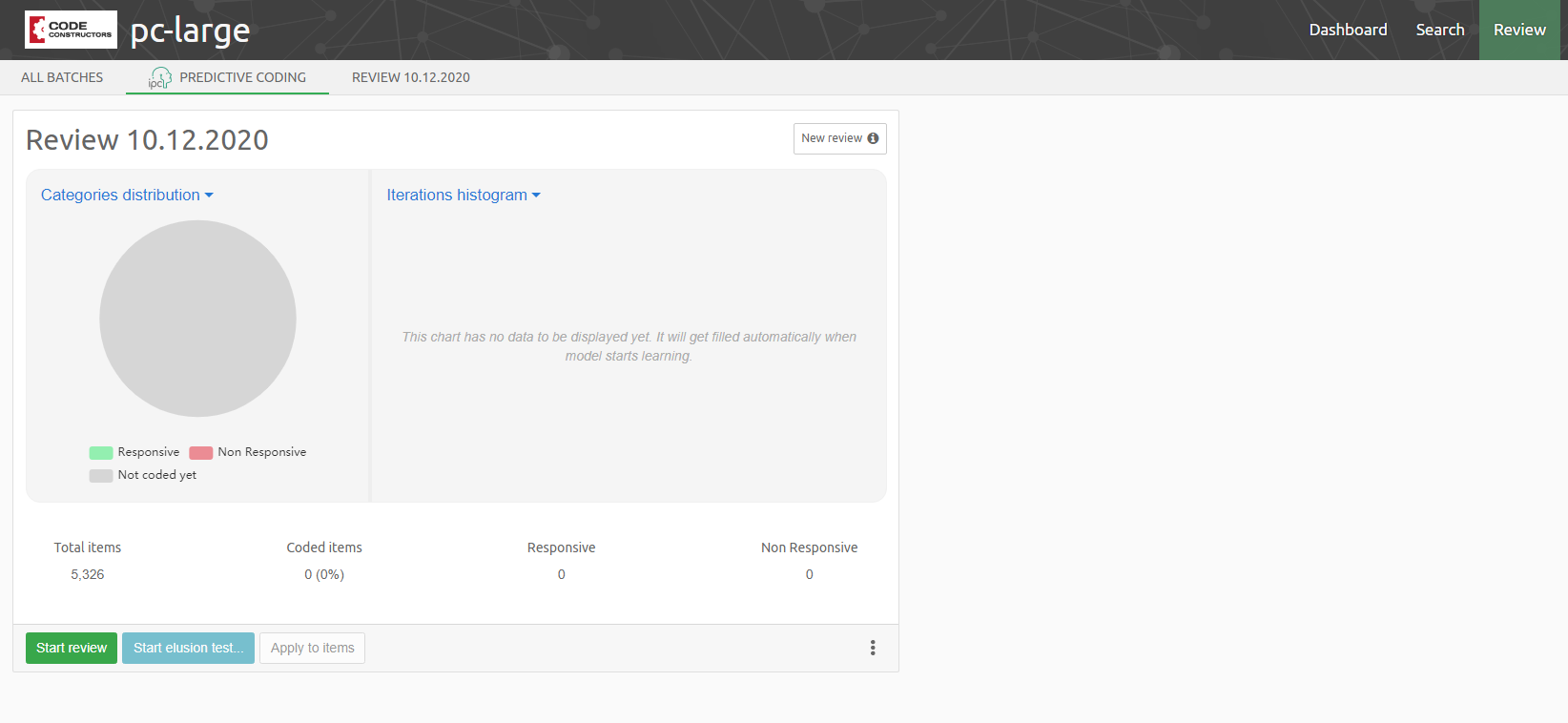

Intella Connect 1.9.1 introduced the new concept of "Batching and Coding" which greatly aids the workflow of a linear review of documents, making it a very easy and effective process. Coding is a process of applying taggings to items, guarded by user defined rules. A batch is simply a set of items grouped together, supplemented with some additional metadata (like name and status), which makes it very easy for users to refer to a particular subset of items in a case. It also helps to track which items have been coded in a context of a given batch.

To learn more about these new features please refer to the Batching and Coding section.

Review View was designed to be a self-contained tool which allows users to stay focused while working on a linear review task. It consists of two kinds of subviews:

-

Batches List View

-

Coding View

Those subviews are accessible from the Secondary Navigation Bar as tabs (links) located on the left. The first tab is called "All batches" and it always points to the Batches List View. The presence of other tabs depends on the fact if currently logged in user has any batches assigned to him. If yes, then new tabs will be added to the Secondary Navigation Bar. In cases where large number of batches is assigned to the user some of tabs will be grouped together in a form of a drop-down list.

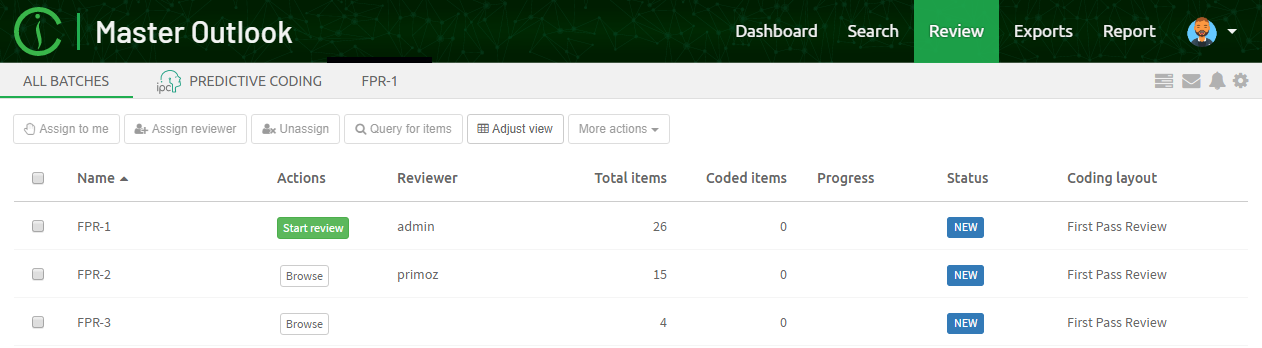

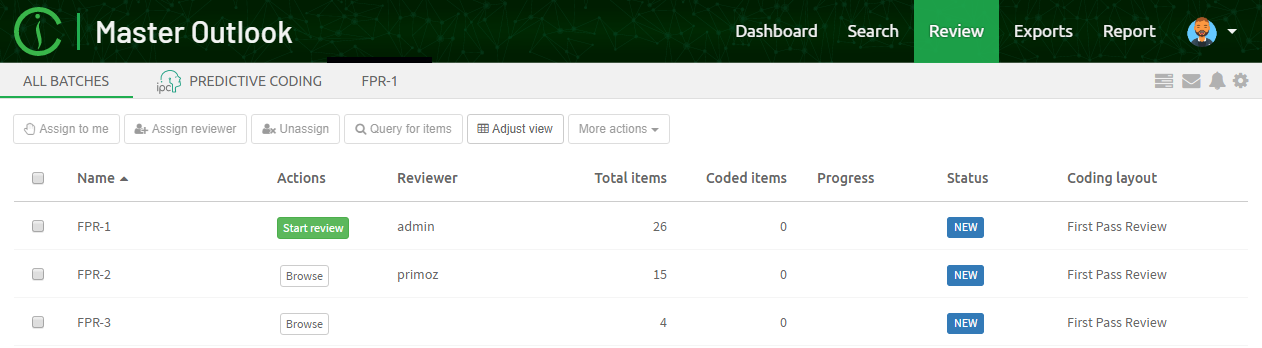

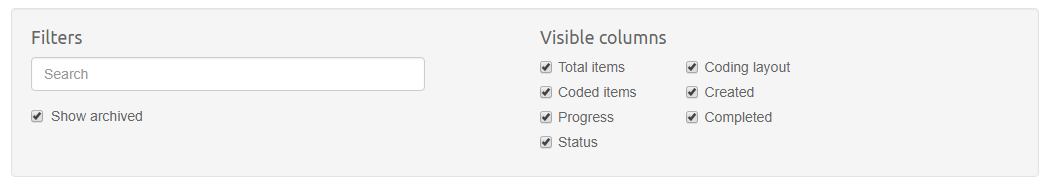

Batches List View

This subview offers a good overview of batches created in this case. It also the view selected by default when users clicks on the "Review" button in the main navigation.

Batches are organized in a form of a table. Each column is sortable by clicking on its name. The first column allows user to select one or multiple rows. Please note that user may also use CTRL or SHIFT buttons while selecting more, to easily select more batches at once.

Please refer to the Batching and Coding section for more information about batches.

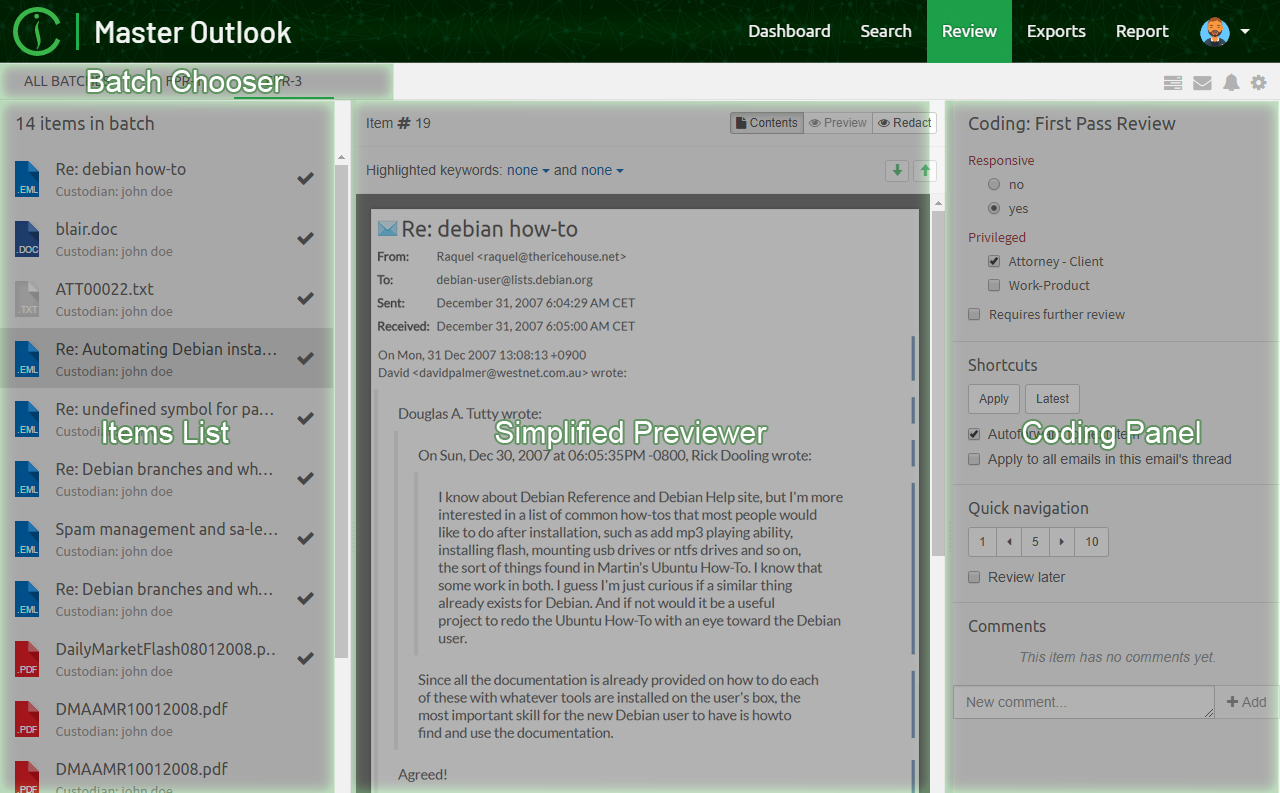

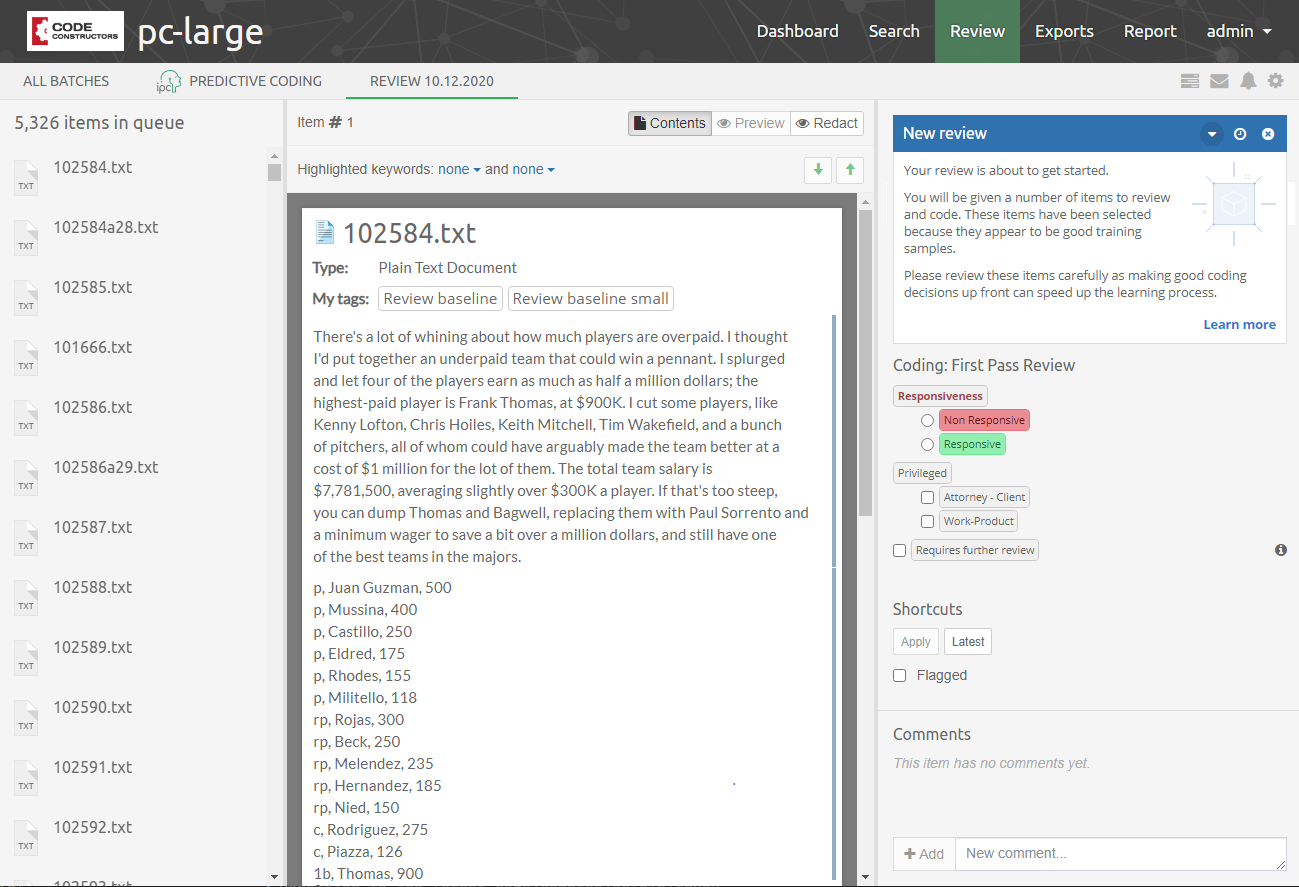

Coding View

The second subview of the Review UI is the Coding View presented below.

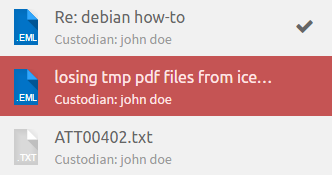

It consists of the three main UI parts:

-

Items List - which lists all items that are a part of the batch being currently browsed.

-

Simplified Previewer - which is a simplified counterpart of our standalone Previewer

-

Coding Panel - allowing users to apply a coding decision to the active item.

It’s important to understand that these tree components are closely tied together. The list on the left gives a nice overview of what kind of items this batch contains, and which one is currently reviewed (active). It also allows to quickly jump between items and it reflects the state of the coding for each item individually. Simplified Previewer makes it easy to evaluate the contents of the active item, see its native Preview (if the current item supports it) or redact it. The Coding Panel reflects the state of coding in the context of the active item.

Each of these components is described in more detail in the Batching and Coding section.

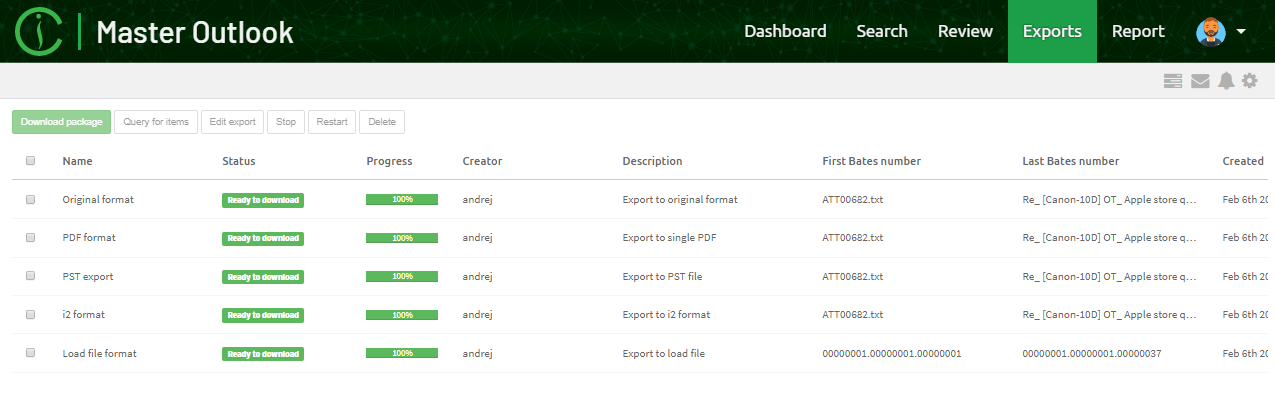

Exports view

Initially, the Export view does not have any export packages. After exporting a collection of search results, the export package will appear in the Export view.

Selecting an export package shows the contents of the export package and additionally allows you to do the following actions:

-

Download package - downloads the export package as a ZIP file into your browser’s download folder.

-

Query for items - creates a query of all items contained in selected export package and adds it to searches list so that they can be reviewed in Search view.

-

Edit export - allows changing the export package name and description.

-

Stop - cancels creation of the export package.

-

Restart - restarts creation of the export package.

-

Delete - removes the export package.

Report view

The Report view is used for generating reports, such as detailed statistics about the keywords in a keyword list or user activity on a case.

It consists of the following subviews:

-

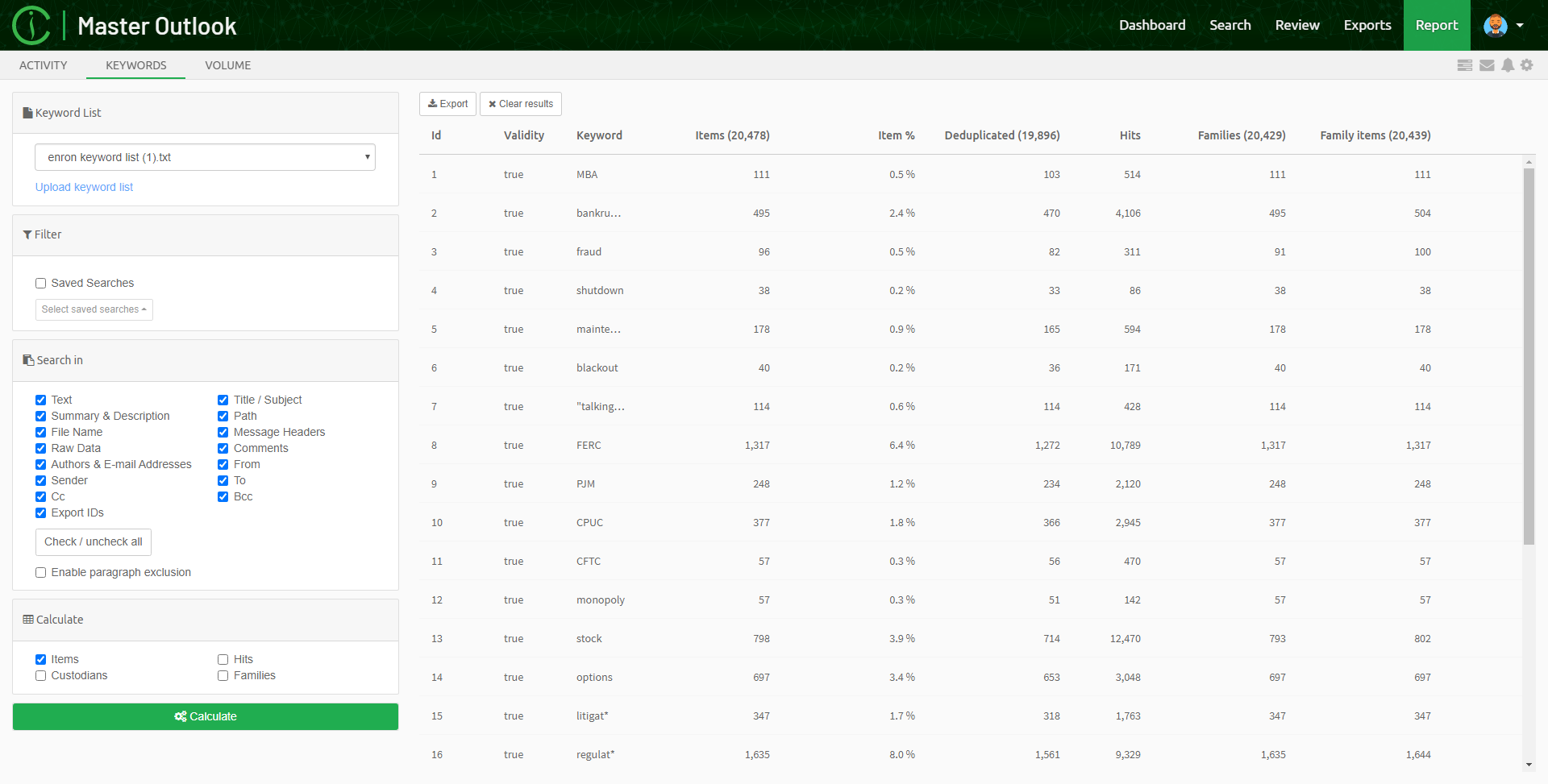

Keyword view, which gives detailed statistics about the keywords in a keyword list.

-

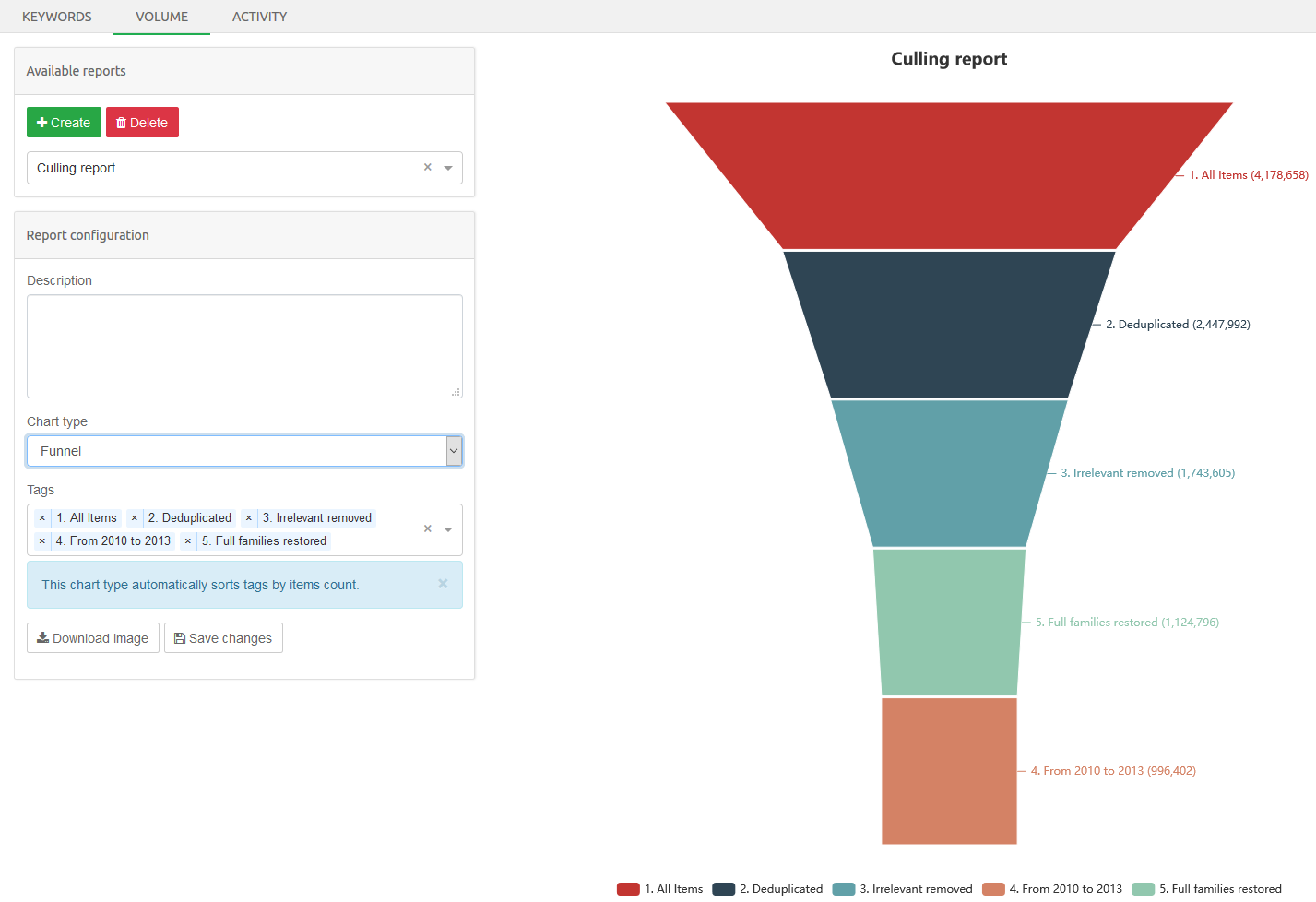

Volume view, which gives view about how tags relate to each other in a context of a case.

-

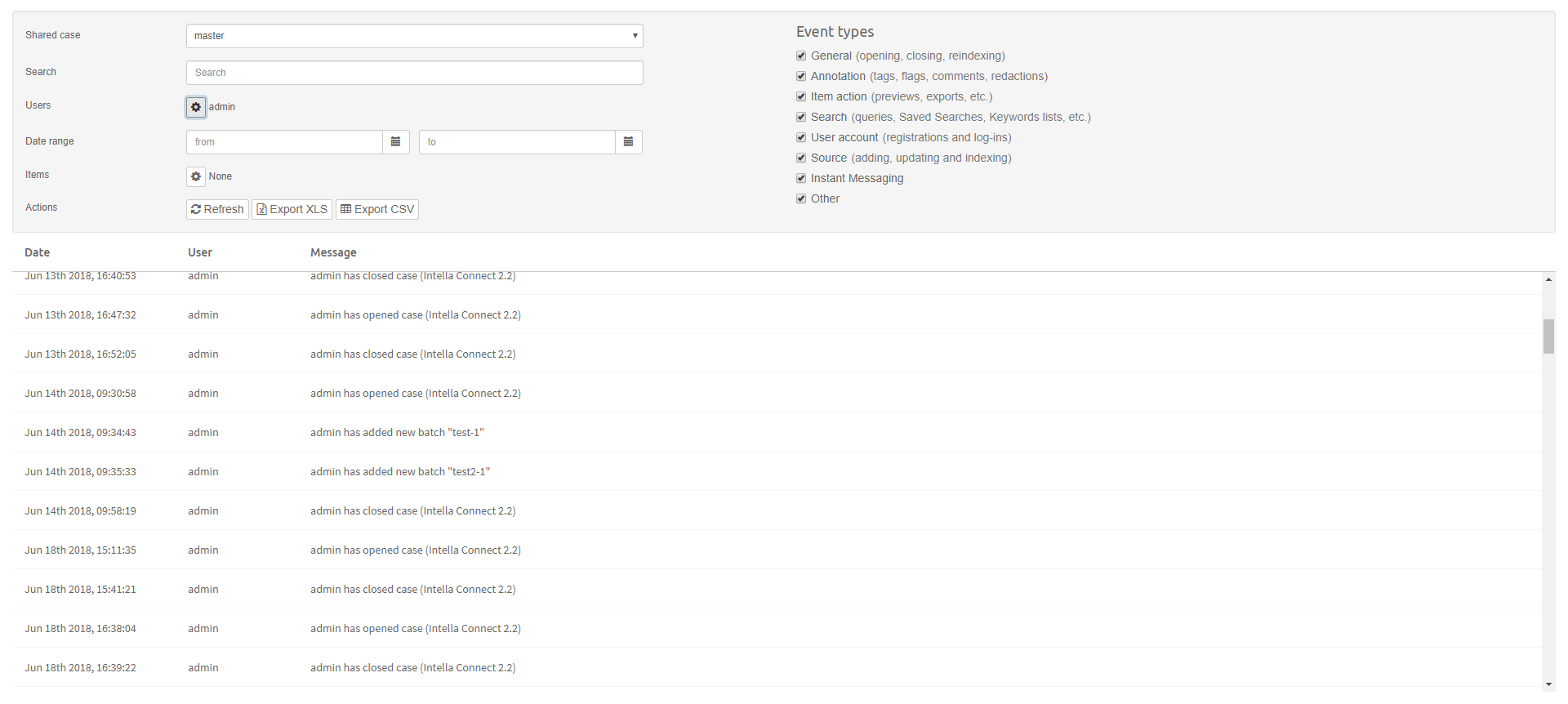

Activity view, which gives detailed view about the activities performed by users logged into a case.

Default subview is the Keywords view.

5. Insight view

The Insight tab contains a number of information panels that together give a concise overview of the information inside the case, revealing suspect behavior and giving rise to follow-up investigative questions.

The information is extracted from a variety of sources, such as emails and documents, web browser histories, Windows registries and more.

Clicking on entries like a document type or custodian name in the Insight tab will add a relevant search for that item category to the Cluster Map in the Search view. The main window will then automatically switch to the Search view as well.

|

The boxes in the Insight section and the Case section can now be reordered and resized to accommodate a user’s needs and display. |

5.1. Case info

Case info section shows the basic case information such as the case folder, case size, creation date, etc.

5.2. Evidence

The Evidence section shows important global statistics regarding your data. A detailed description of each category can be found in the Facet panel explaining the Features facet.

5.3. Types

The Types section shows a breakdown of the different types of files and other items in the case. It shows the same hierarchical structure as the Type facet described in Facet panel .

5.4. Custodians

The Custodians section shows the list of custodians in the case, if any, together with the number of items that are assigned to them. A pie chart showing these amounts is shown to the right of the table.

For detailed information on how to define custodians see the section titled "Custodians" in Facet panel .

5.5. GDPR

The GDPR section gives an overview of privacy-sensitive information encountered in the case. Examples of such information are person names, email addresses, phone numbers and other communication handles, credit card numbers, etc. Such information is important from a GDPR compliancy perspective, or similar legal frameworks in use around the world.

For each category of personally identifiable information (PII), the number of values found is listed. These values can be exported to a CSV file. Furthermore, the number of items that contain at least one of these values is listed. This amount is further split up in Documents, Emails, and Other categories.

The PII categories are split into two groups, based on whether the PII was found in the document/email body or in the metadata.

Click on a link in the first column to switch to the Search tab and see the items involved in that category.

Some categories are determined during indexing, yet some other categories may require Content Analysis to be run first.

5.6. Internet Artifacts

The Internet Artifacts section contains information about web browser activity, based on the browser histories detected in the evidence data.

All major browsers are supported: MS Internet Explorer/Edge, Mozilla Firefox, Google Chrome and Apple Safari.

The top chart shows the list of encountered browser histories, listing the following information:

-

The path of the browser history in the evidence data.

-

The type of browser, represented by the browser’s desktop icon.

-

The number of visited URLs in the browser history, both as a number and as a bar showing the amount relative to the total amount of visited URLs in the entire case.

-

The last used date of the browser history, i.e. the last time a new URL was added or a visit count was updated. Note that manual deletions of URLs in the history by the end user are not taken into account when determining the last used dates; it is merely indicative of when the regular day-to-day usage of that browser ended.

At the very top of this list is a row that represents the total amount of visited URLs in the case, regardless of location and web browser type.

Beneath the list of browser histories there is a breakdown of the visited URLs:

-

The "Top 100 visited URLs" table shows the most visited URLs, with for each URL the number of visits as indicated by the browser history.

-

The "Top 100 visited domains" table shows the most visited domains, together with the sum of the visit counts of all URLs in that domain. Subdomains are treated as independent domains.

-

The panels “Social media”, “Cloud storage”, “Webmail” and “Productivity” show the number of visits that belong to some commonly used websites, such as Facebook and Twitter for social media, DropBox and OneDrive for cloud storage, Gmail and Yahoo Mail for webmail, etc.

By default, this breakdown covers all visited URLs in the case. By clicking on a row in the list of browser histories one can narrow down on the visited URLs in that particular browser history. The selected browser is indicated by the blue URL count bar.

|

The categories and domains that are checked can be configured by editing the common-websites.xml file in the [CASEDIR]\prefs folder. |

|

During the development of this functionality we observed that the semantics of a “visited URL” may differ between browsers, possibly even between browser versions. In some cases it indicates that the user explicitly visited a URL by entering it in the browser’s address bar or by clicking a link. In other cases all resources loaded as a consequence of displaying that page may also be registered as “visited”, even resources from other domains, without making any distinction between the explicitly entered or clicked URLs on the one hand and the other resources on the other hand. One should therefore carefully look at the operation of a specific browser before drawing any final conclusions. |

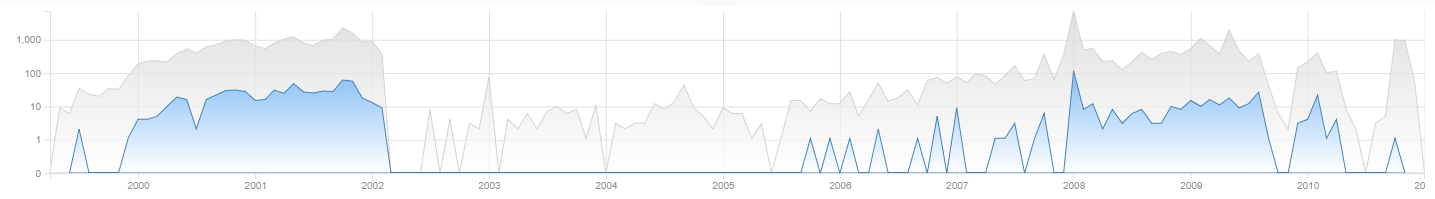

5.7. Timeline

The Timeline shows the timestamps of all items in the case over the years of months. This not only gives a rough overview of events over time, but can also be used to find data anomalies, e.g. unexpected peaks or gaps in the volume of emails, which for example may be caused by an incomplete capture of evidence files, bugs in the custodian’s software, default values entered by client software and actions of malicious custodians (resetting date fields, deleting information).

To the right of the chart are all date fields that Intella currently supports. Each date field shows the number of items that have that date field set. Date fields that do not occur in this case are disabled. (De)selecting one of the checkboxes changes the Timeline to include or exclude the counts for that date field.

This update may take some time, depending on the case size and whether a local or remote case is used. The resulting counts are cached so that afterwards the user can toggle that checkbox and see the chart change instantly. The chart can alternatively show months or years.

|

The Timeline’s time axis only shows dates between January 1 1969 and two years from “now”. This is to prevent obviously incorrect dates that have been extracted from corrupt files from spoiling the graph. |

5.8. Identities

The Identities section consists of three tables with various types of identities, which may be representing users or other entities.

The User accounts table shows a list of user accounts extracted from the evidence data. These can be:

-

Windows user accounts, extracted from Windows registry hives.

-

Skype user accounts, extracted from Skype databases. These are the database’s local account, not the entire contacts list of that account.

-

Pidgin user accounts. Again these are the local accounts, not the entire contact list.

-

User accounts in cellphone reports as produced by Cellebrite UFED, Micro Systemation XRY and the Oxygen Forensic suite. See the documentation of the respective product for details on the correct interpretation of such information.

The “Origin” column in this table shows either a machine name extracted from a Windows registry or the location of the evidence file that the account was extracted from.

The Top 10 email addresses table shows the 10 email addresses with the highest number of emails in the case. Both the raw and deduplicated counts are shown. The top 10 is based on the raw counts.

The Top 10 host names table shows the host names that have the most emails associated with them. These are essentially the host names that show up when you expand the “All Senders and Receivers” branch in the Email Address facet. Both the raw and deduplicated counts are shown. The top 10 is based on the raw counts.

5.9. Notable Registry Artifacts

The Notable Registry Artifacts (NRA) section gives insight into the most important artifacts extracted from the Windows registry hives of the investigated machines/operating systems.

A case may contain evidence files (usually in the form of disk images) that relate to multiple operating systems (OSes), simply because multiple machines may be involved, but also because a machine may have multiple operating systems installed. Hence the artifacts are grouped by OS, labeled by the “Computer Name” that was extracted from the registry, and further subdivided in a number of categories.

The following artifact types are currently extracted and reported:

-

Basic OS information

-

OS time zones

-

OS user accounts

-

Network interfaces

-

Network connections

-

USB mass storage devices that have been connected

-

Recently used files

-

Shellbags

-

Typed URLs registered by web browsers using the registry

A “registry artifact” is a logical concept in Intella Connect that is modeled as an atomic item in the case and that holds important information typically used in digital forensic investigations. This information is specially selected for this purpose by experienced forensic experts. While the properties of a registry artifact may be scattered across different registry hives and backups of these hives, Intella Connect will unify them into a coherent item.

The NRA section is divided into two parts. On the left hand side, labeled “Overview”, the tree organizing the registry artifacts is shown. The first level nodes represent OSes labeled with the “Computer Name” extracted from the registry. One lever deeper we find sub-nodes for the various registry categories (e.g. “User Accounts”), followed by leaf nodes representing the actual artifacts (e.g. a specific User Account).

One can select a leaf node in this tree, which will show the properties of that registry artifact in the Details view on the right hand side. Clicking on button "Open in previewer" in the Details view opens the registry artifact item in the Previewer.

This shows additional information such as the location of the item and allows for browsing to nearby items in the item hierarchy using the Previewer’s Tree tab.

Besides the regular registry hives, the Windows registry maintains backup files in the form of so-called “RegBack” files. Intella Connect will process these files as well and display the extracted data in the NRA section. Values coming from such backup registry hives are marked with a “RegBack” label and are only displayed when they differ from the corresponding values in the current files. Not doing so would greatly increase the amount of redundant registry information.

5.9.1. Supported registry hives

Intella Connect will process the following registry hives:

| Registry Hive Name |

Location |

|---|---|

SYSTEM |

Windows/System32/config/SYSTEM |

SYSTEM (RegBack) |

Windows/System32/config/RegBack/SYSTEM Windows/repair/SYSTEM |

NTUSER.DAT |

Found under folder Users/<user id> or Documents and Settings |

SOFTWARE |

Windows/System32/config/SOFTWARE |

SOFTWARE (RegBack) |

Windows/System32/config/RegBack/SOFTWARE Windows/repair/SOFTWARE |

SAM |

Windows/System32/config/SAM |

SAM (RegBack) |

Windows/System32/config/RegBack/SAM Windows/repair/SAM |

|

Registry artifacts can be extracted from disk images and folders only if all relevant files are located in the proper folders, e.g. Windows\System32\config\SYSTEM. |

|

Support for Windows XP and older is limited. |

5.10. Devices

The Devices section contains a list of all USB mass storage devices that have been connected to the suspect machines. This information is taken from the Notable Registry Artifacts section. It provides the ability to quickly oversee and sort all devices found in the case.

5.11. Networks

The Networks section contains a list of wired and wireless networks that a suspect machine has been connected to. This information is taken from the Notable Registry Artifacts section and from cellphone reports. It provides the ability to quickly oversee and sort all networks found in the case.

5.12. Significant Words

The Significant Words panel visualizes important words encountered in the item texts in the case, based on a statistical model of term relevance. The bigger the font of a particular word, the higher the relevance that word may have for the data set at hand.

These results are purely suggestive: though they are based on commonly used information retrieval techniques, they only look at the evidence data. In particular, they do not take the investigative research questions into account, or any investigative results such as items tagged as “relevant”.

The Paragraphs section shows statistics on the paragraphs that Intella Connect has registered, when the Analyze Paragraphs setting was set on the source(s) in the case. It lists the number of unique and duplicate paragraphs, both as raw numbers and as percentages. Furthermore, the Paragraphs marked as Seen or Unseen are counted. Finally, the number of Documents, Emails and Other item types with unique content (i.e. a paragraph that does not occur in any other item) is listed. These groups can be clicked, which shows these item sets in the Search tab.

5.13. Workflow

The Workflow section lists additional tasks that one might consider after the initial indexing is done. These tasks can further refine the case index quality and kick-start the investigation and analysis phases.

Additional Processing category:

-

The Export encrypted items link opens up the Export wizard for all items that are encrypted but have not been decrypted.

Export encrypted items list exports the metadata of these items to a CSV file.

-

The Export unprocessed items link opens up the Export wizard for all items that fall into the “Extraction Unsupported” category in the Features facet.

Export unprocessed items list exports the metadata of these items to a CSV file.

-

The Export exception items link opens up the Export wizard for all items that fall into the “Exception Items” category in the Features facet.

Search & Analysis category:

-

The Run content analysis link initiates the content analysis procedure for all items in the case. This detects person, organization and location names used in the item texts and reports them in the Content Analysis facets.

-

Add keyword list adds a keyword list to the case, for use in the Keyword Lists facet or Keywords tab in the Statistics view.

-

Add MD5 list adds an MD5 or message hash list, for use in the MD5 and Message Hash facet.

-

Add saved search adds a saved search obtained from another case to this case, for use in the Saved Searches facet and Keywords tab in the Statistics view.

Report category:

There are currently no tasks available in this category.

6. Keyword search

To search for some text, select "Text Search" facet, enter a query in the textbox and click the magnifier icon button.

|

If a query is more complex and takes more time to evaluate, then refreshing the page or closing the browser tab during this evaluation will cause the query to be cancelled and will disappear from results list. |

For query syntax rules, please see the Search query syntax section below.

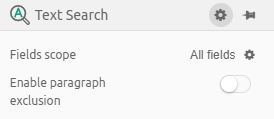

6.1. Search options

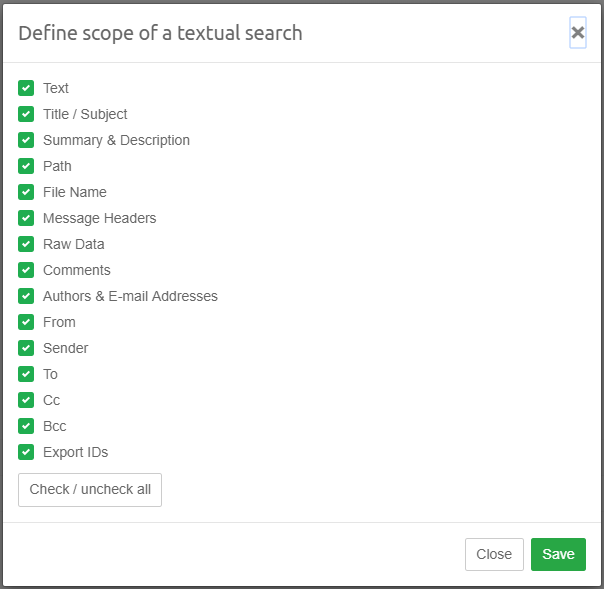

Clicking on gear icon next to Text Search title will show the following options:

-

Fields scope

-

Enable paragraph exclusion

With search options you can limit keyword searching to specific item parts or attributes:

-

Text

-

Title / Subject

-

Summary & Description

-

Path (= folder and file name)

-

File name

-

Message Headers

-

Raw Data (e.g. low-level data from PST files, MS Office documents, vCards)

-

Comments

-

Authors & E-mail Addresses

-

Each of the From, Sender, To, Cc and Bcc fields separately

-

Export IDs (searches in the export IDs of the items that are part of any export set)

To see the search options, click on Fields scope option or gear icon next to it. The options box will be displayed as a popup window.

Select the properties that you want to include in your search and deselect those you want to exclude. Your selected search options will be stored and used for future searches until the time you change them again.

|

As a reminder, a warning label will be shown when not all options are selected. |

Enable paragraph exclusion switch is used to exclude paragraphs marked for exclusion as described in the

Previewing results

section.

6.2. Search query syntax

In the text field of the Search panel you can use a special query syntax to perform complex multi-term queries and use other advanced search features.

6.2.1. Use of multiple terms (AND/OR operators)

By default, a query containing multiple terms matches with items that contain all terms anywhere in the item. For example, searching for:

John Johnson

returns all items that contain both “John” and “Johnson.” There is no need to add an AND (or “&&”) as searches are performed as such already, however doing so will not negatively affect your search.

If you want to find items containing at least one term but not necessarily both, use one of the following queries:

John OR Johnson

John || Johnson

6.2.2. Minus sign (NOT operator)

The NOT operator excludes items that contain the term after NOT:

John NOT Johnson

John -Johnson

Both queries return items that contain the word “John” and not the word “Johnson.”

John -“John goes home”

This returns all items with “John” in it, excluding items that contain the phrase “John goes home.” The NOT operator cannot be used with a single term. For example, the following queries will return no results:

NOT John

NOT “John Johnson”

6.2.3. Phrase search

To search for a certain phrase (a list of words appearing right after each other and in that particular order), enter the phrase within full quotes in the search field:

“John goes home”

will match with the text “John goes home after work” but will not match the text “John goes back home after work.” Phrase searches also support the use of nested wildcards, e.g.

“John* goes home”

will match both “John goes home” and “Johnny goes home”.

6.2.4. Grouping

You can use parentheses to control how your Boolean queries are evaluated:

(desktop OR server) AND application

retrieves all items that contain “desktop” and/or “server,” as well as the term “application.”

6.2.5. Single and multiple character wildcard searches

To perform a single character wildcard search you can use the “?” symbol. To perform a multiple character wildcard search you can use the “*” symbol.

To search for “next” or “nest,” use:

ne?t

To search for “text”, “texts” or “texting” use:

text*

The “?” wildcard matches with exactly one character. The “*” wildcard matches zero or more characters.

6.2.6. Fuzzy search

Intella Connect supports fuzzy queries, i.e., queries that roughly match the entered terms. For a fuzzy search, you use the tilde (“~”) symbol at the end of a single term:

roam~

returns items containing terms like “foam,” “roams,” “room,” etc.

The required similarity can be controlled with an optional numeric parameter. The value is between 0 and 1, with a value closer to 1 resulting in only terms with a higher similarity matching the specified term. The parameter is specified like this:

roam~0.8

The default value of this parameter is 0.5.

6.2.7. Proximity search

Intella supports finding items based on words or phrases that are within a specified maximum distance from each other in the items text. This is a generalization of a phrase search.

To do a proximity search you place a tilde (“~”) symbol at the end of a phrase, followed by the maximum word distance:

"desktop application"~10

returns items with these two words in it at a maximum of 10 words distance.

It is possible to mix individual words, wildcards and phrases in proximity queries. The phrases must be enclosed in single quotes (' ') in this case:

"'desktop application' 'user manual'"~10

Nested proximity searches are also possible:

"'desktop application'~2 'user manual'~4"~10

| Nested phrase and proximity queries are always use single quotes. Using regular double quotes for them will cause a syntax error. Only one level of nesting is possible. |

6.2.8. Field-specific search

Intella’s Keyword Search searches in document texts, titles, paths, etc. By default, all these types of text are searched through. You can override this globally by deselecting some of the fields in the Options, or for an individual search by entering the field name in your search.

title:intella

returns all items that contain the word “intella” in their title.

The following field names are available:

-

text - searches in the item text

-

title - searches in titles and subjects

-

path - searches in file and folder names and locations

-

filename - searches in file names only

-

summary - searches in descriptions, metadata keywords, etc.

-

agent – searches in authors, contributors and email senders and receivers

-

from – searches in email From fields

-

sender – searches in email Sender fields

-

to – searches in email To fields

-

cc – searches in email Cc fields

-

bcc – searches in email Bcc fields

-

headers - searches in the raw email headers

-

rawdata - searches in raw document metadata

-

comment - searches in all comments made by reviewer(s)

-

export - searches in the export IDs of the items that are part of any export set

You can mix the use of various fields in a single query:

intella agent:john

searches for all items containing the word “intella” (in one of the fields selected in the Options) that have “john” in their author metadata or email senders and receivers.

6.2.9. Tokenization and Special characters

Tokenization underlies the keyword search functionality in Intella. It is the process of dividing texts into primitive searchable fragments, known as "tokens" or "terms". Each token makes a separate entry in the text index, pointing to all items containing this token. Keyword search works by finding matches between the tokens in the user’s query and in the index. Therefore, for effective keyword search, it is vital to have a basic understanding of how tokenization works in Intella.

Tokenization employs different algorithms, but in the most common case it is simply splitting the text around specific characters known as "token delimiters". These delimiters include spaces, punctuation symbols, and other non-alphabetic characters, to produce tokens close to the natural language words.

A side effect of this method is that it is impossible to search for words together with the token delimiters. If these characters are met in the user query, they play their delimiting role, thus being handled the same as simple spaces. This is rarely a problem, although it should be taken into account when doing a keyword search.

| To search for exact text fragments, including all punctuation and special characters, the Content Analysis functions can be used (see the section on the Content Analysis facet for details). |

| A list of all search tokens, generated for an item, can be seen in the "Words" tab of the Previewer window. |

There is no specific support for the handling of diacritics. E.g., characters like é and ç will be indexed and displayed, but these characters will not match with 'a' and 'c' in full-text queries. A workaround can be to replace such characters with the '?' wildcard.

The following characters have special meaning in the query syntax and may cause an error message if not used in accordance to the syntax rules:

+ - && || ! ( ) { } [ ] ^ " ~ * ? : / \

To prevent the syntax errors, these characters need to be escaped by the preceding \ character. Please note that if the character is classified as a token delimiter, then escaping it in the query will not make it searchable.

6.2.10. Regular Expressions

This release contains experimental support for searching with regular expressions. This may be extended, refined and documented in a future release. For now, please visit http://lucene.apache.org/core/4_3_0/queryparser/org/apache/lucene/queryparser/classic/package-summary.html#Regexp_Searches for more information.

Be aware that these regular expressions are evaluated on the terms index, not on the entire document text as a single string of characters! Your search expressions should therefore take the tokenization of the text into account.

7. Using facets

Besides keyword searching, the indexed items can be browsed using facets, which represent specific item properties.

Every facet organizes the items into groups (possibly hierarchical) depending on a specific item property.

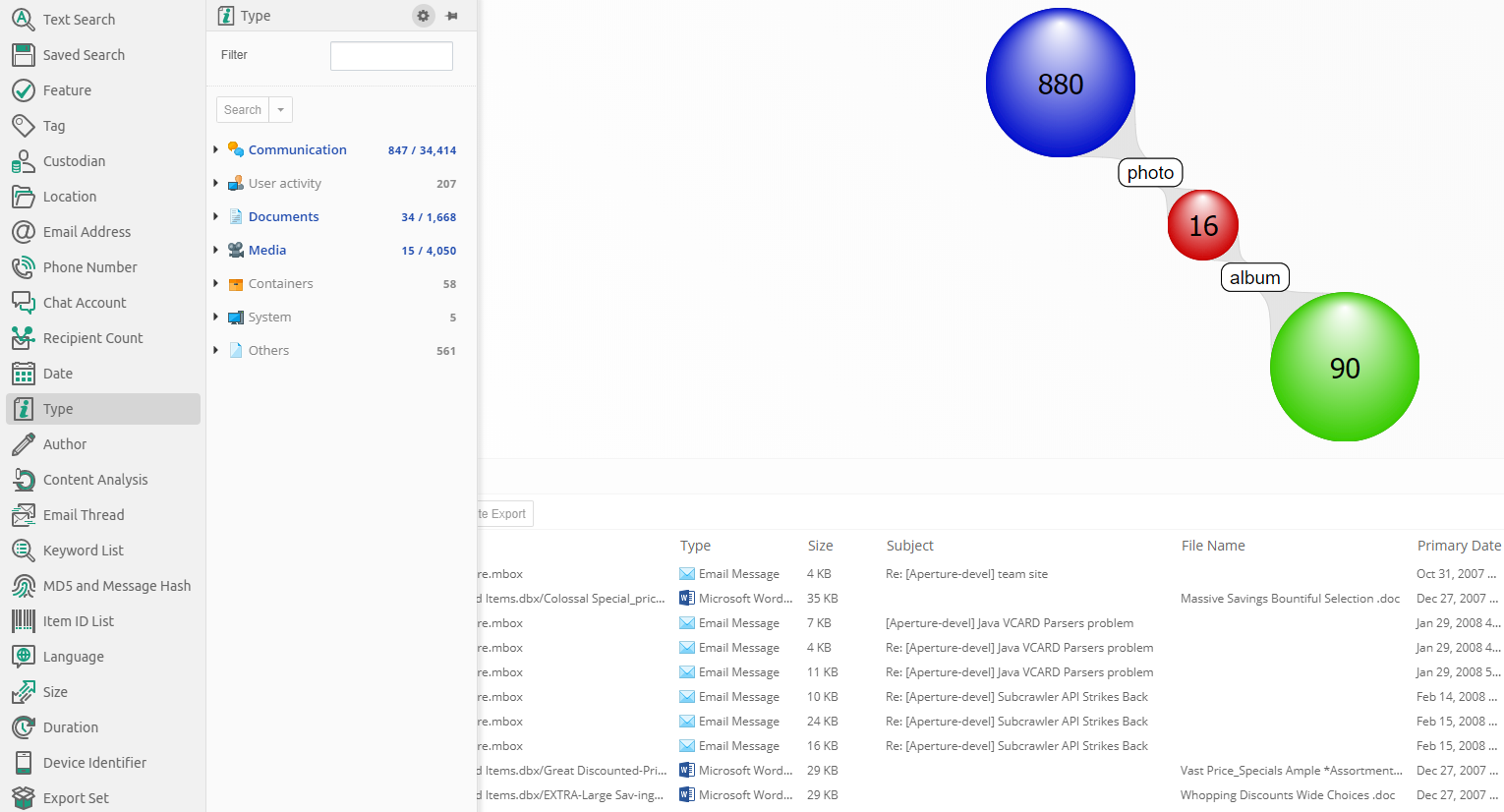

Clicking on a facet in the facets panel will open a list of all values of the selected facet on the right side, next to the list and it will overlay portion of cluster map / geolocation view and table view. In the example below, the Type facet has a list of file types as values.

If a query is selected in searches panel or cluster map and items corresponding to that query are present in facet entry within facet list, then that facet entry will be highlighted in blue and the amount shown in that facet entry will be two numbers separated by slash. The first number is amount of items in selected query and second number is total amount of items in case for given entry of facet. For example, below screenshot shows that in selected query there are 34 documents out of 1,668 documents present in case.

If a faced is overlayed, then clicking elsewhere than on facet list or facet itself will cause the overlayed facet to hide. To ensure that a facet is displayed at all times, you can click on pin button which will cause it to no longer overlay portion of cluster map / geolocation view and table view, but will instead take up the space and shift cluster map / geolocation view and table view to the remaining space. Pinning first facet will also cause it to stay in place and clicking on any other facet will open the clicked facet on top and move the pinned facet below the clicked facet.

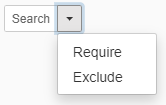

To search for items that match a facet value, select the value and click the Search button on top of the values.

The facet panels can be resized and reordered, to better accommodate the user’s workflow.

| It is possible to select more than one facet value at a time by holding down the Ctrl key when clicking on the facet values. |

| Some facets have additional options which can by hidden or shown by clicking on the gear icon next to the pin icon. |

7.1. Available facets

7.1.1. Saved Searches

The Saved Searches is a list of previous sets of searches that the user has stored.

When search results are displayed in the Cluster Map and the Searches list, the Save button beneath the Searches list will be shown.

When the user clicks this button, a dialog opens that lets the user enter a name for the saved search. After clicking on the OK button, the chosen name will appear in the list in the Saved Searches facet.

| Predefined Saved Search called 'Possible spam' is added to every newly created case. It can be found under "Default searches" branch. |

Click on the name of the saved search and then on the Restore button to bring the Cluster Map and the Searches list back into the state it had when the Save option was used.

Additional options are shown or hidden when using the gear icon:

The "Replace current results" checkbox controls what happens with the currently displayed searches when you restore a saved search. When turned on, the Cluster Map and Searches list will be emptied first. When selected, the contents of the saved search will be appended to them.

When the 'Combine queries' checkbox is selected, searches contained in the selected saved search will be combined to search for items matching any of the contained searches (Boolean OR operator). The items will be returned as a single set of results (one cluster).

7.1.2. Features

The Features facet allows you to identify items that fall in certain special purpose categories:

-

Encrypted: all items that are encrypted. Example: password-protected PDF documents. When you select this category and click the Search button, you will be shown all items that are encrypted.

Sometimes files inside an encrypted ZIP file are visible without entering a password, but a password still needs to be entered to extract the file. Such files cannot be exported by Intella Connect if the password has not been provided prior to indexing. In this case both the ZIP file and its encrypted entries will be marked as Encrypted, so searching for all encrypted items and exporting those will capture the parent ZIP file as well. -

Decrypted: all items in the Encrypted category that Intella Connect was able to decrypt using the specified access credentials.

-

Unread: all emails, SMS/MMS, chat messages and conversations that are marked as "unread" in the source file. Note that this status is not related to previewing in Intella Connect.

This property is only available for PST and OST emails and some cellphone dumps. If the Unread property is not set, it could mean that either the item was not read or that the property is not available for this item. Some tools allow the user to reset a message’s unread status, so even when the flag is set, it cannot be said with certainty that the message has not been read. -

Empty documents: all items that have no text while text was expected. Example: a PDF file containing only images.

-

Has Duplicates: all items that have a copy in the case, i.e. an item with the same MD5 or message hash.

-

Has Shadow Copies: all items that have another version located in a shadow copy volume.

-

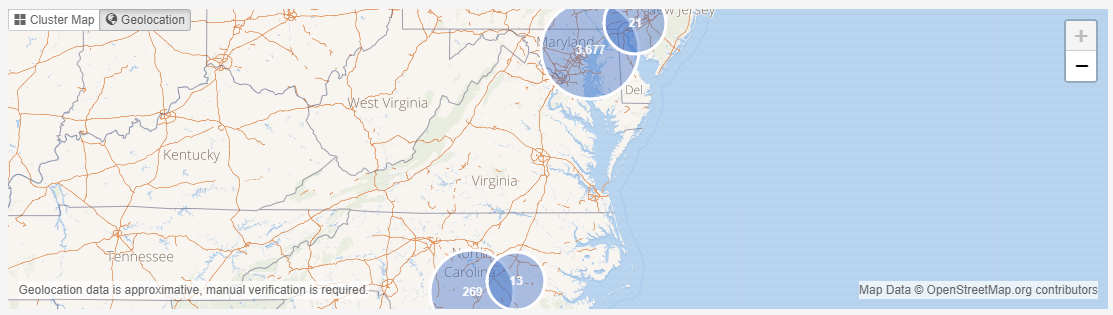

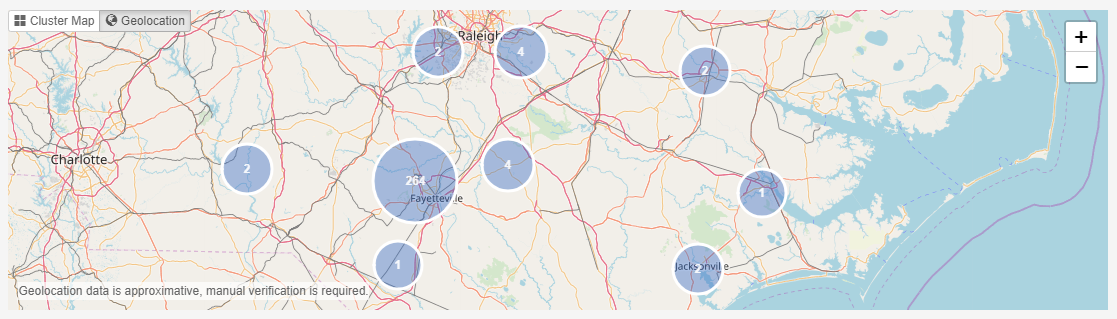

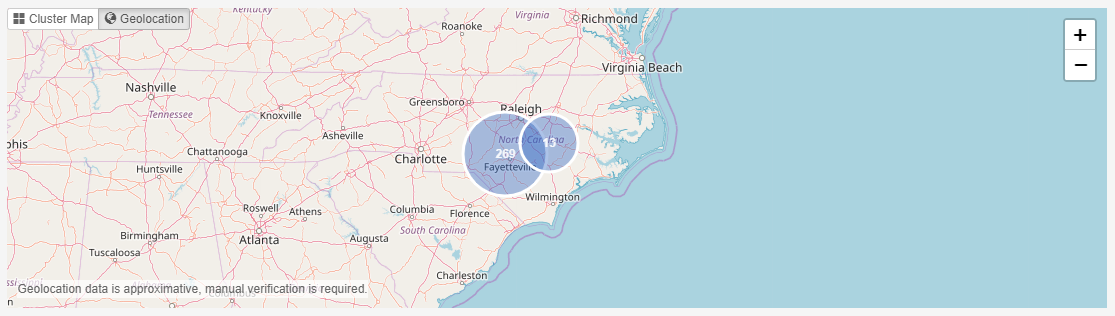

Has Geolocation: indicates whether a geolocation has been associated with the item, either as part of the original metadata or through an IP geolocation lookup.

-

Downloaded from Internet: indicates items that might have been downloaded from the Internet. Intella determines such items by looking at Zone.Identifier alternate stream in NTFS disk images. Where possible, Intella can extract the URL where the file was downloaded from. It can be found in Raw Data tab.

-

OCRed: indicates whether the item has been OCRed after indexing.

-

Has Imported Text: all items that have text imported using importText option in Intella Command-line interface.

-

Content Analyzed: all items for which the Content Analysis procedure has been applied.

-

Images Analyzed: all items for which the Image Analysis procedure has been applied.

-

Exception items: all items that experienced processing errors during indexing. This has six subcategories:

-

Unprocessable items: the data cannot be processed because it is corrupt, malformed or not understood by the processor. Retrying will most likely result in the same result.

-

I/O errors: the processing failed due to I/O errors. The processing might succeed in a repeated processing attempt.

-

Decryption failures: the data cannot be processed because it is encrypted and a matching decryption key is not available. The processing might succeed in a repeated processing attempt when the required decryption key is supplied.

-

Timeout errors: the processing took too long and was aborted. See more details on how to configure crawler timeout in "Memory, crawler count and timeout settings" chapter.

-

Truncated text: The document text was not fully processed due to one of the following reasons:

-

The document text was larger than the imposed document text limit and any additional text was ignored. See the Sources section for a description of this limit and how to alter it.

-

Binary content was removed from the document text. Intella will try to detect and remove so-called binary content from all processed text to reduce memory usage when processing corrupt or recovered files. It includes any control and non-printable characters that are not normally present in regular texts. Items with binary content removed will have error description: "Binary content detected".

-

Full item text could not be extracted because the format is not fully supported yet.

-

-

Out of memory errors: the processing failed due to a lack of memory.

-

Processing errors: the processing failed due to a problem/bug in the processor. The description should contain the stack trace.

-

Crawler crash errors: the processing failed due to a crawler crash. This is a more severe error compared to the Processing Error type. When it occurs, Intella will also reject all items that are related to crashed item (e.g. PST file and all of the emails that it contains). More details about why the crawler crashed can usually be found in a hs_err_pid_XYZ.log file which is located in the case logs folder (one file per crash). Crawler crashes will not affect other items and the case integrity.

-

-

Extraction Unsupported: all items that are larger than zero bytes, whose type could be identified by Intella Connect, are not encrypted, but for which Intella Connect does not support content extraction. An example would be AutoCAD files: we detect this image type but do not support extraction any content out of it.

-

Text Fragments Extracted: indicates whether heuristic string extraction has been applied on a (typically unrecognized or unsupported) binary item.

-

Irrelevant: all items that fall into one of the categories below and that themselves are considered to be of little relevance to a review (as opposed to their child items):

-

Folders

-

Email containers (PST, NSF, Mbox, …)

-

Disk images (E01, L01, DD, …)

-

Cellphone reports (UFDR, XRY XML, …)

-

Archives (ZIP, RAR, …)

-

Executables (EXE, BAT, …)

-

Load files (DII, DAT, …)

-

Empty (zero byte) file

-

Embedded images - defined below

-

-

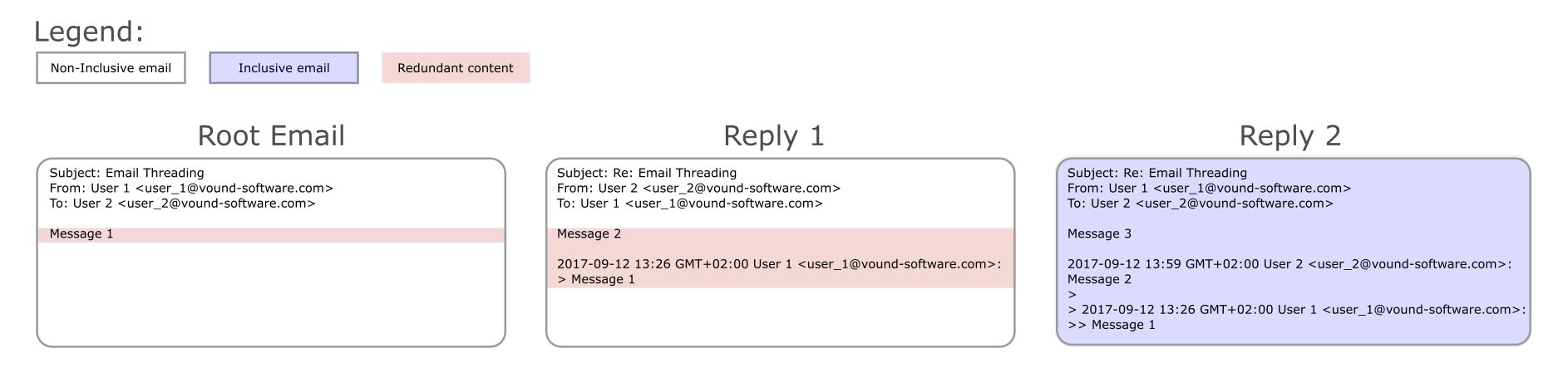

Threaded: all items that have been subjected to email threading processing and that were subsequently assigned to a thread (see the Email Thread facet). Subtypes:

-

Inclusive: all email items marked as inclusive.

-

Non-Inclusive: all email items marked as non-inclusive.

-

Missing Email Referent: Indicates that the threading process has detected that the email item is a reply to another email or a forwarded email, but the email that was replied to or that has been forwarded is not available in the case.

-

-

Recovered: all items that were deleted from a PST, NSF, EDB, disk image, cellphone report or cloud source or volume shadow copy and that Intella Connect could still (partially) recover. The items recovered from PST, NSF and EDB files are the items that appear in the artificial "<RECOVERED>" and "<ORPHAN ITEMS>" folders of these files in the Location facet. The items recovered from volume shadow copies are located in the artificial "<Volume Shadow Copies>" folder of the parent volume in the Location facet. The Recovered branch in the Features facet has the following sub-branches, based on the recovery type and the container type:

-

Recovered from PST.

-

Orphan from EDB.

-

Orphan from NSF.

-

Orphan from PST.

-

Recovered from cellphone.

-

Recovered file metadata from disk image.

-

Recovered entire file content from disk image.

-

Recovered partial file content from disk image.

-

Recovered from cloud source.

-

Recovered from volume shadow copy.

-

-

Attached: all items that are attached to an email. Only the direct attachments are reported; any items nested in these attachments are not classified as Attachment.

-

Has attachments: all emails, documents and user activities that have other items attached to it. Note that it does NOT include embedded images.

-

Embedded Images: all items that have been extracted from a document, spreadsheet or presentation.

-

Tagged: all items that are tagged.

-

Flagged: all items that are flagged.

-

Batched: all items that are assigned to at least one batch

-

Commented: all items that have a comment made by a reviewer.

-

Previewed: all items that have been opened in Intella’s Previewer.

-

Opened: all items that have been opened in their native application.

-

Exported: all items that have been exported.

-

Redaction: all items that have been subject to one of the redaction procedures. See the section on Redaction for more information.

-

Redacted: all items that have one or more parts blacked out due to redactions. Items on which the Redact function has been used but in which no parts have actually been marked as redacted are not included in this category.

-

Queued for Redaction: all items that have their Queued for Redaction checkbox selected. These will turn to Redacted once the user performs the Process Redaction Queue function on them.

-

Missing keyword hits: all items that had a redaction issue when Process Redaction Queue was invoked.

-

-

Top-Level Parent: all items that are the top-level parent. Top-level parents are determined per the Show Parents settings, configurable with desktop versions of Intella.

-

W4 Delta: new items found by Intella in imported W4 cases.

-

All items: all items (non-deduplicated) in the entire case.

Additional option is shown or hidden when using the gear icon:

Filter can be used to quickly find relevant feature entry from the list by a potion of feature name.

| In cases in which multiple reviewers have been active, i.e. shared cases or cases with imported Work Reports, the Previewed, Opened, Exported, Commented, Tagged, Flagged and Redacted nodes shown in the Facet panel will have sub-nodes, one node for each user. |

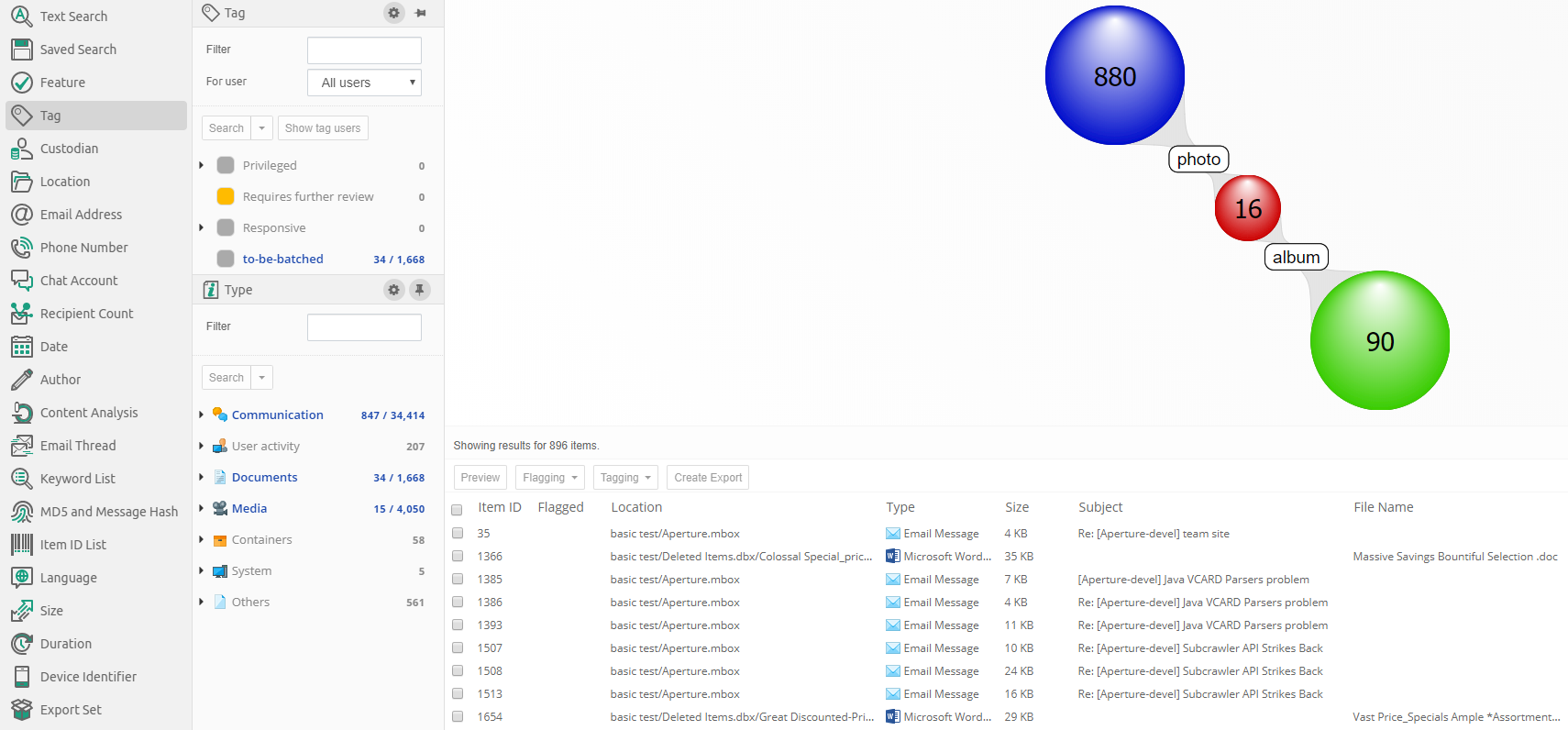

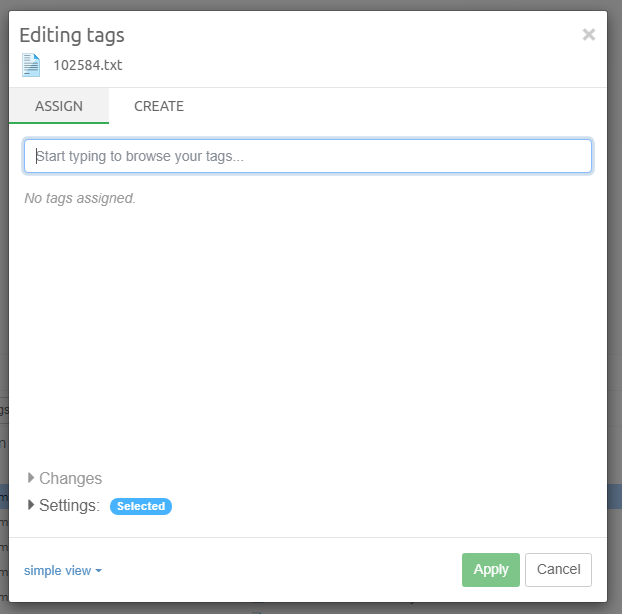

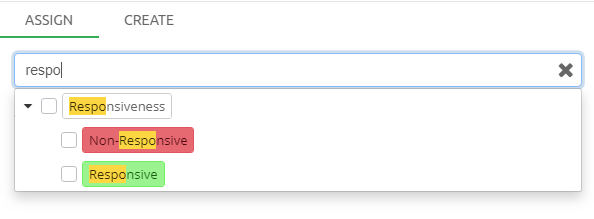

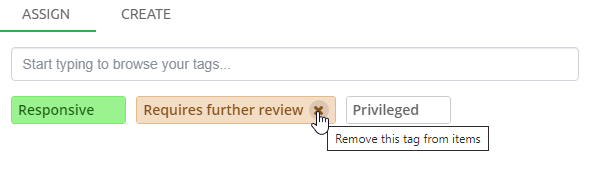

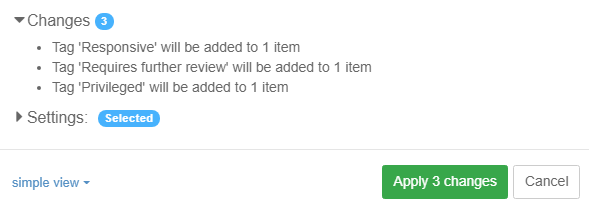

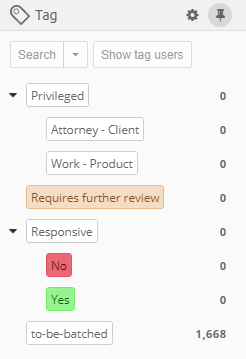

7.1.3. Tags

Tags are labels defined by the user to group individual items. Typically

used tags in an example are for example "relevant", "not relevant" and

"legally privileged". Tags are added to items by right-clicking in the

Details panel and choosing the Add or edit tags… menu option. Tags

can also be added in the Previewer or by applying a Coding decision to

an item via Coding Form.

To search for all items with a certain tag, select the tag from the Tags list and click the Search button above the list.

When clicking on options button (gear icon), then the Tags facet panel will have the following options:

-

filter which allows the user to filter the tags list by a potion of tag name.

-

a drop-down list at the top, listing the names of all reviewers that have been active in this case. You can use this list to filter the tags list for taggings made by a selected reviewer only. Note that tags with zero taggings from selected user will not be shown when using user filtering.

Select the "All users" option to show taggings from all users as well as tags with zero taggings.

If the same tag has been used by different reviewers, their names and the numbers of tagged items are displayed in modal which shows tagging breakdown per user when clicked on "Show user tags" button.

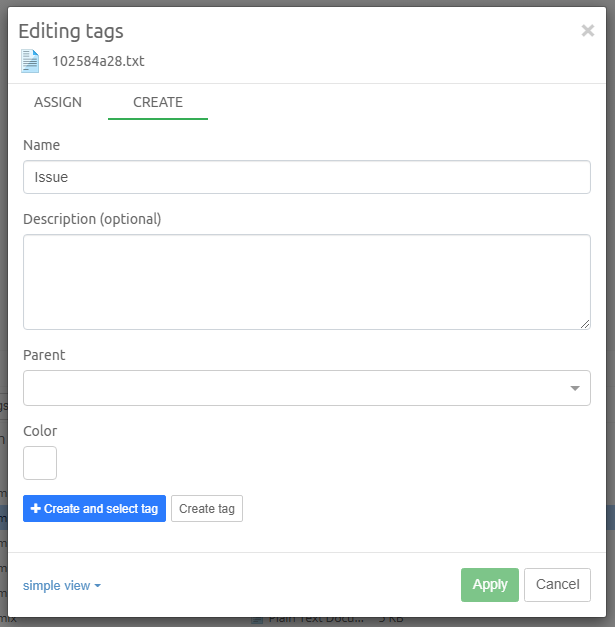

The tags can be organized into a hierarchical system by the creation of sub-tags within an existing (parent) tag group. You can create a sub-tag, from "Add or edit tags…" dialog by specifying a parent tag in a drop down list.

To rename a tag or change the tag description, select the tag in the facet and choose "Edit…" in the context menu which appears after clicking on more options icon (3 dots icon).

|

When a tag is renamed, all items associated with this tag will be assigned the new tag name automatically. However, some operations that depend on specific tag names (such as indexing tasks with the Tag condition) may need to be corrected manually. |

To delete a tag, select it in the facet and choose "Delete…" in the context menu which appears after clicking on more options icon (3 dots icon). This might require a special permission being assigned to your user.

To see a pivot report showing how many items were tagged by each user,

select some tags and click on the Show tag users button. A modal dialog

will appear showing selected tags in separate rows and users contributing

to particular tag in columns.

|

This feature is disabled if you select a tag coming from a sub-case. |

7.1.4. Identities

The Identities facet makes it possible to query for all items linked to an identity. An identity query combines the results of the queries for the individual email addresses, phone numbers and chat accounts into a single item set. The result is a holistic view of the communication of that person, regardless of the media and aliases used for that communication.

In case of email addresses, an Identity query also finds items where the email address occurs in the item text. It therefore casts a wider net than merely looking at senders and receivers.

7.1.5. Custodians

Custodians are assigned to items to indicate the owner from whom an evidence item was obtained. The "Custodians" facet lists all custodian names in the current case and allows searching for all items with a certain attribute value. Custodian name attributes are assigned to items either automatically (as part of post-processing) or manually in the Details panel. To assign a custodian to items selected in the Details panel, use the "Set Custodian…" option in the right-click menu.

To remove custodian information from selected items, choose the "Clear Custodian…" option.

To delete a custodian name from the case and clear the custodian attribute in all associated items, select the value in the facet panel and choose "Delete" in the context menu which appears after clicking on more options icon (3 dots icon).

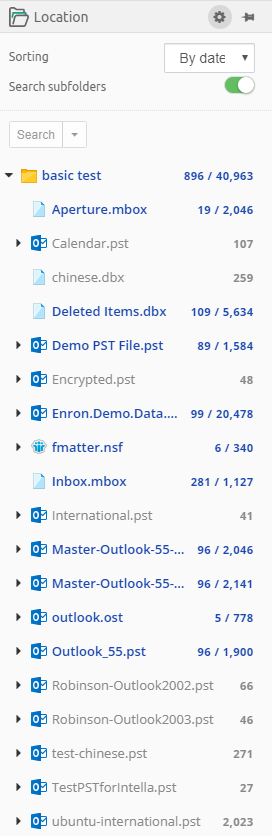

7.1.6. Location

This facet represents the folder structure inside your sources. Select a folder and click Search to find all items in that folder.

Additional options are shown or hidden when using the gear icon:

-

Sorting - allows to sort the root entries either by name or by date when the source containing that root entry was added.

-

Search subfolders - the selected folder, all items in that folder, and all items nested in subfolders will be returned, i.e. all items in that entire sub-tree.

When "Search subfolders" is not selected, only the items nested in that folder will be returned. Items nested in subfolders will not be returned, nor will the selected folder itself be returned.

When your case consists of a single indexed folder, then the Location tree will show a single root representing this folder. Selecting this root node and clicking Search with "Search subfolders" switched on will therefore return all items in your case.

When your case consists of multiple mail files that have been added separately, e.g. by using the PST and NSF source types in the New Source wizard, then each of these files will be represented by a separate top-level node in the Location tree.

By default Location facet will expand all root sources so that their children are immediately visible. This behavior can be changed using Facets .

7.1.7. Email Address

This facet represents the names of persons involved in sending and receiving emails. The names are grouped in ten categories:

-

From

-

Sender

-

To

-

Cc

-

Bcc

-

Addresses in Text

-

All Senders (From, Sender)

-

All Receivers (To, Cc, Bcc)

-

All Senders and Receivers

-

All Addresses

When clicking on options button (gear icon), then the facet provides filtering option by above mentioned categories.

Most emails typically only have a From header, not a Sender. The Sender header is often used in the context of mailing lists. When a list server forwards a mail sent to a mailing list to all subscribers of that mailing list, the message send out to the subscribers usually has a From header representing the conceptual sender (the author of the message) and a Sender header representing the list server sending the message to the subscriber on behalf of the author.

7.1.8. Phone Number

This facet lists phone numbers observed in phone calls from cellphone reports as well as phone numbers listed in PST contacts and vCard files.

The "incoming" and "outgoing" branches are specific to phone calls. The "All Phone Numbers" branch combines all of the above contexts.

When clicking on options button (gear icon), then the facet provides filtering option by above mentioned categories.

7.1.9. Chat Account

This facet lists chat accounts used to send or receive chat messages, such as Skype and WhatsApp account IDs. Phone numbers used for SMS and MMS messages are also included in this facet.

When clicking on options button (gear icon), then the facet provides filtering option by above mentioned categories.

7.1.10. Recipient Count

This facet lets the user search on recipient count ranges by entering the type and the number of recipients (minimum and maximum). The following recipient types are supported:

-

All Recipients: all email, chat, and cellphone recipients.

-

Visible Recipients: visible email, chat, and cellphone recipients (To, Cc).

-

Blind Recipients: blind carbon copy email recipients (Bcc).

When clicking on options button (gear icon), then the facet provides filtering option by above mentioned categories.

7.1.11. Date

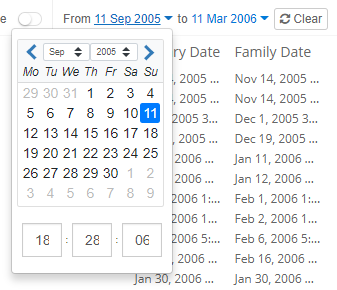

This facet lets the user search on date ranges by entering a From and To date. Please note that the date entered in the To field is considered part of the date range.

Besides start and end dates, Intella Connect lets the user control which date attribute(s) are used:

-

Sent (e.g. all e-mail items)

-

Received (e.g. all e-mail items)

-

File Last Modified (e.g. file items)

-

File Last Accessed (e.g. file items)

-

File Created (e.g. file items)

-

Content Created (e.g. file items and e-mail items from PST files)

-

Content Last Modified (e.g. file items and e-mail items from PST files)

-

Primary Date

-

Family Date

-

Last Printed (e.g. documents)

-

Called (e.g. phone calls)

-

Start Date (e.g. meetings)

-

End Date (e.g. meetings)

-

Due Date (e.g. tasks)

All fields can be de/selected with "Check / uncheck all" checkbox.

The Date facet will only show the types of dates that actually occur in the evidence data of the current case.

Furthermore it is possible to narrow the search to only specific days or specific hours. This makes it possible to e.g. search for items sent outside of regular office hours.

Primary and Family dates

While processing the dates of all items, Intella Connect will try to pick a matching date rule based on the item’s type and use it to determine the Primary Date attribute for that item. The rules affecting this process are configurable with desktop versions of Intella and currently cannot be changed in Intella Connect. Reindexing the case or modifying rules used to compute Primary Dates may also affect values of Family Date attribute for items, as those two attributes are tightly related. To learn more about those attributes please refer to Details panel section of the manual.

7.1.12. Type

This facet represents the file types (Microsoft Word, PDF, JPEG, etc.), organized into categories (Communication, Documents, Media etc.) and in some cases further into subcategories. To refine your query with a specific file type, select a type from the list and click the Search button.

Note that you can search for both specific document types like PNG Images, but also for the entire Image category.

Empty (zero byte) files are classified as "Empty files" in the "Others branch".

Additional option is shown or hidden when using the gear icon:

Filter can be used to quickly find relevant type entry from the list by a potion of type name.

7.1.13. Author

This facet represents the name(s) of the person(s) involved in the creation of documents. The names are grouped into two categories:

-

Creator

-

Contributor

When clicking on options button (gear icon), then the facet provides filtering option by above mentioned categories.

To refine your query by a specific creator or contributor name, select the name and click the Search button.

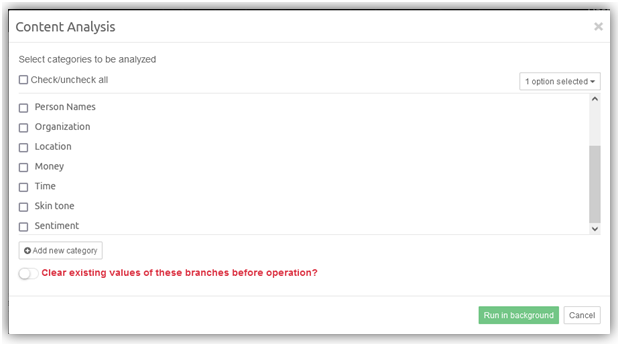

7.1.14. Content Analysis

The Content Analysis facet allows you to search items based on specific types of entities that have been found in the textual content of these items. Three of the categories in this facet are populated automatically during indexing and are available immediately afterwards. These are:

-

Credit card numbers

-

Social security numbers (SSNs)

-

Phone numbers

The other categories are more computationally expensive to calculate and therefore require an explicitly triggered post-processing step. These categories are:

-

Person names

-

Organizations (e.g. company names)

-

Locations (e.g. city and country names)

-

Monetary amounts

-

Time (words and phrases related to the hours, minutes, weekdays, dates, etc.)

-

Sentiment analysis (sub-categories such as Negative, Neutral and Positive based on the text in documents or messages)

-

Custom regular expressions (for searching e.g. bank account numbers, patent numbers and other types of codes that can be formally described as a regular expression)

When opening the facet you will be presented with all available Content Analysis categories. After clicking on a category, the panel will slide and values matching this category will be presented in a form of a table. You can query for a specific value using the Search button at the top, or by double clicking on one of the rows. Clicking on the Back button will present a list of categories again.

To learn more about how to conduct Content Analysis please refer to section Content Analysis .

7.1.15. Email Thread

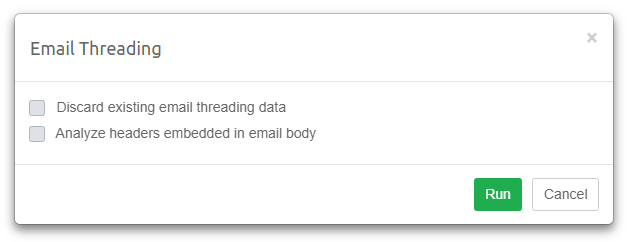

In the Email Thread facet you can search for emails based on the email thread identified by the email threading procedure. To populate this facet, a user needs to perform the email threading procedure on a selected set of items. Please see the Email Threading section for instructions.

Additional options are shown or hidden when using the gear icon:

Be default, all threads containing only a single email are hidden from view, as they can greatly increase the length of the list and are typically of little use. To include these threads in the list, disable the “Hide threads with one email” switch.

Email threads shown in this facet can be sorted by name, item count or node count. The difference between item and node is that items can be counted in details table and nodes are counted in email thread tab in previewer. Thread can have different item and node count if some items are duplicated in that thread.

7.1.16. Near-duplicates

This facet lists all item groups identified by the last near-duplicates analysis. To populate this facet, a user needs to perform the near-duplicates analysis procedure on a selected set of items. Please see the Administrator’s manual > Near-duplicates Analysis section for instructions.

The names of near-duplicate groups are derived from titles of their master items. Searching for a group produces a set of items which include a master item and its near-duplicates with similarity scores within the threshold specified for near-duplicates analysis. Additionally, after expanding facet options you can sort near-duplicate groups either by the name (default) or the group size.

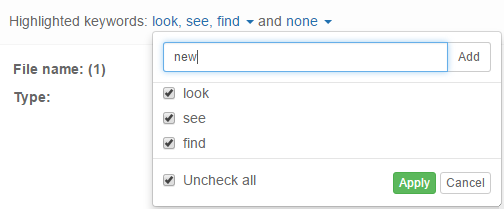

7.1.17. Keyword Lists

In the Keyword Lists facet you can load a keyword list, to automate the searching with sets of previously determined search terms.

A keyword list is a text file in UTF-8 encoding that contains one search term per line. Note that a search term can also be a combination of search terms, like "Paris AND Lyon".

Once loaded, all available keywords lists are shown in the Keyword Lists

facet. They are now available for search. You can also explore searches

defined in each list by hovering the mouse over it and selecting Explore…

from the contextual menu.

Additional options are shown or hidden when using the gear icon:

When the 'Combine queries' checkbox is selected, multiple keywords selected from a specific keyword list will be combined to search for items matching any of the selected terms (Boolean OR operator). The items will be returned as a single set of results (one cluster). If the checkbox is not selected, the selected terms will be searched separately, resulting in as many result sets as there are selected queries in the list.

The other two options displayed here are working the same as in context of a regular Text Search and are used to limit the scope of your query to selected fields or to enable paragraphs exclusion.

|

Selected options are affecting searches triggered from this facet as well as item counts displayed when exploring contents of a list. |

|

Keyword lists can be used to share search terms between investigators. |

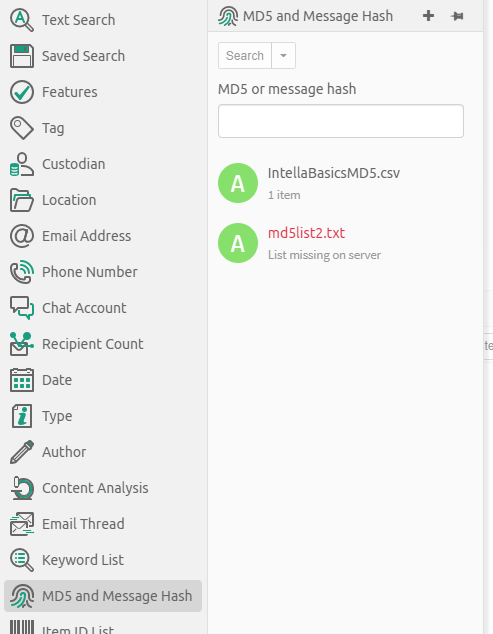

7.1.18. MD5 and Message Hash

Intella can calculate MD5 and message hashes to check the uniqueness of files and messages. If two files have the same MD5 hash, Intella considers them to be duplicates. Similarly, two emails or SMS messages with the same message hash are considered to be duplicates. With the MD5 and Message Hash facet you can:

-

Find items with a specific MD5 or message hash and

-

Find items that match with a list of MD5 and message hashes.

Specific MD5 or message hash

You can use Intella Connect to search for files that have a specific MD5 or message hash. To do so, enter the hash (32 hexadecimal digits) in the field and click the Search button.

List of MD5 or message hashes

The hash list feature allows you to search the entire case for MD5 and message hash values from an imported list. Create a text file (.txt) with one hash value per line. Use the Add… button in the MD5 Hash facet to add the list. Select the imported text file in the panel and click the Search button below the panel. The items that match with the MD5 or message hashes in the imported list will be returned as a single set of results (one cluster).

Structured vs Legacy message hash

In Intella 2.2.2 a more flexible algorithm for calculating message hashes has been introduced: structured message hashes. Cases that have been created with Intella 2.2.2 or newer will use the structured message hashes by default. Cases that have been created with older versions will keep using the old algorithm until the case is fully re-indexed. That re-index is required to calculate the Body Hash, one of the four components of structured message hashes, for applicable items. The algorithm for message hashes cannot be configured in Intella Connect - this needs to be done in desktop version of Intella.

Structured message hash

The structured message hash exists of four components: Header, Recipients, Body, and Attachments. By default, the calculated message hash will be based on all four components, but you can deselect any of these to make deduplication of message items less strict. For example, when the Recipients component is deselected, an email with a Bcc header will be considered as a duplicate of an email without that header (assuming all other components are equal).

For email items, the following data is included in the four components of a structured message hash:

-

Header – The sender, subject and sent date.

-

Recipients – The To, Cc and Bcc header values.

-

Body – The email’s text body.

-

Attachments – The combined MD5 hashes of all email attachments.

All upper case/lower case differences of textual data is ignored, and for the email body all whitespace and formatting characters (Unicode categories C and Z) are ignored too. The sent date is rounded down to full minutes. For attachments that are embedded emails, the structured message hash of that email is used, instead of the MD5 hash.

|

When deduplicating a set of items, Intella Connect will select the item that has the lowest item ID for each set of duplicates. This item may be missing specific details that are present in duplicates. This effect becomes more likely when a less strict message hash configuration is used. |

Legacy message hash

The message hash is calculated by calculating the MD5 hash of a list of concatenated item properties. For emails the following properties are used:

-

From, Sender, To, Cc and Bcc headers.

-

Subject header.

-

Date header.

-

Email body.

-

All other MIME parts (attachments, nested messages, signatures, etc.).

For SMS, MMS, and other types of chat messages such as Skype and WhatsApp messages, the following parts are used:

-

The sender information.

-

The receiver information.

-

The textual content of the message.

When certain headers/properties occur multiple times, all occurrences are used.

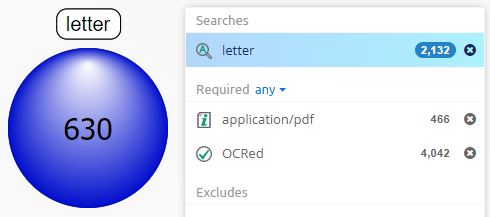

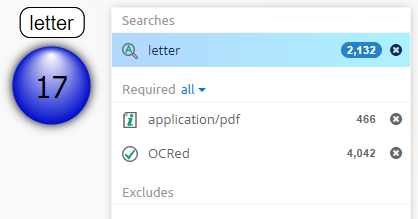

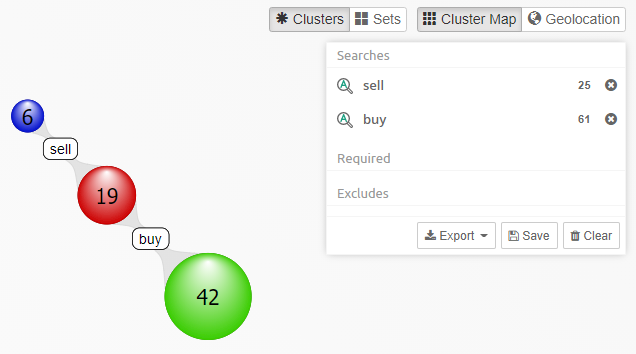

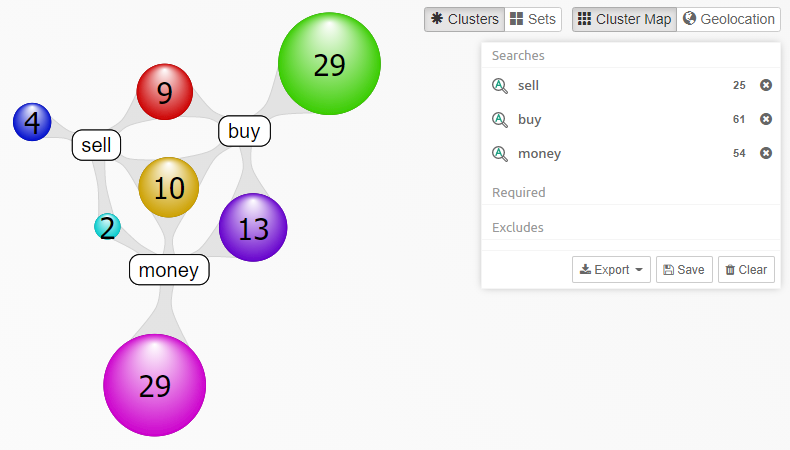

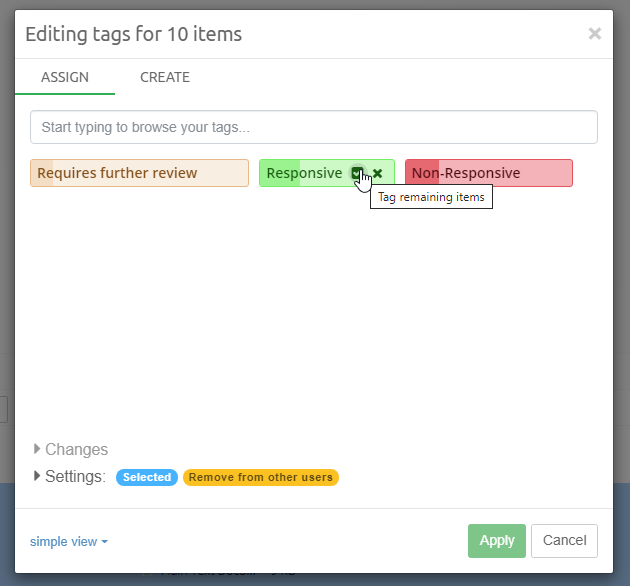

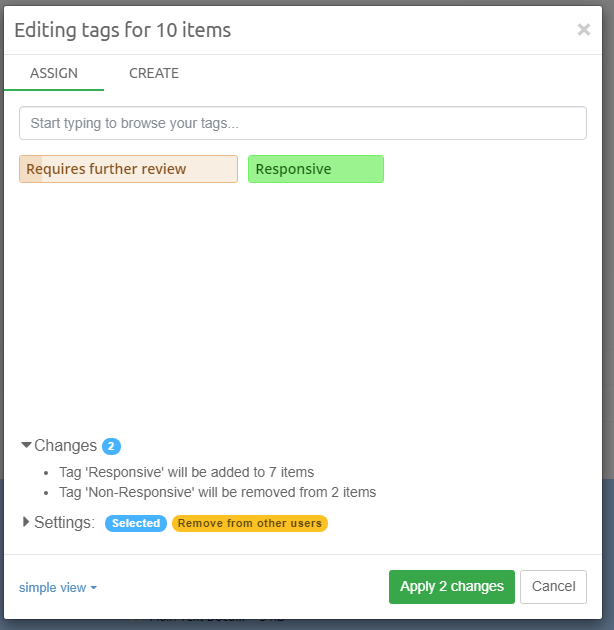

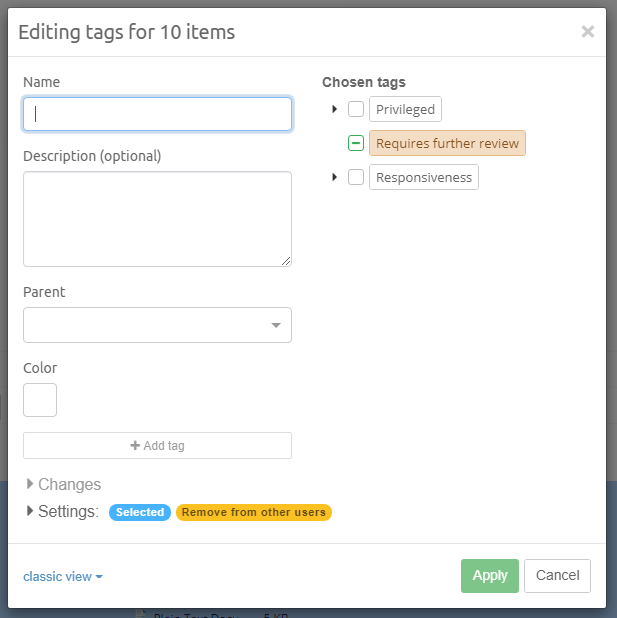

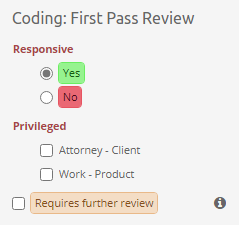

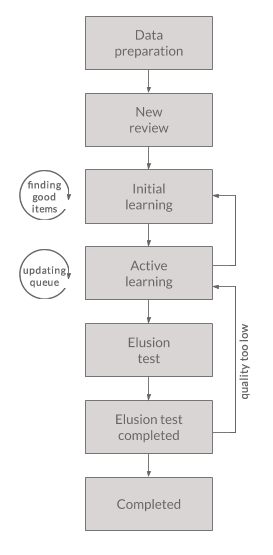

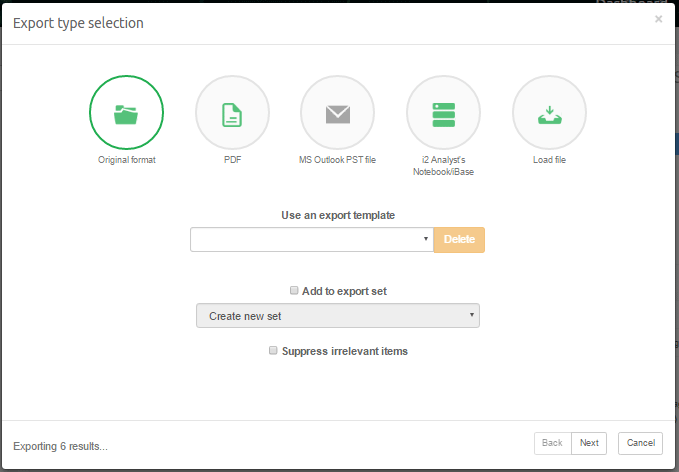

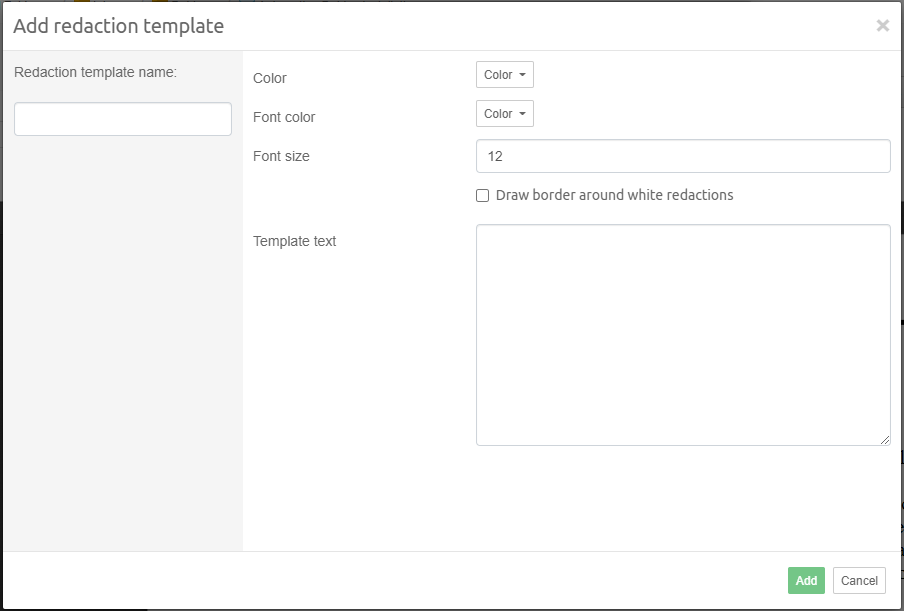

A difference between email message hashes and chat message hashes is that the hashing procedure for emails will simply skip missing values, whereas for chat messages all fields need to be present to calculate a hash.